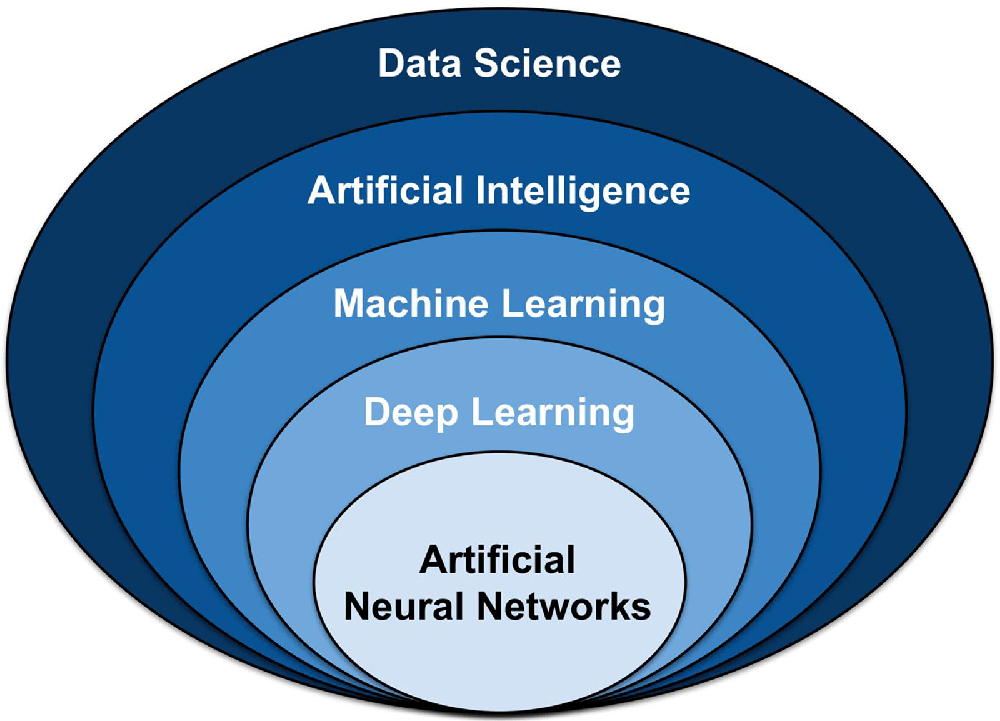

It is important to have a clear understanding of the basic types of artificial intelligence. The terms Artificial Intelligence, Machine Learning, and Deep Learning are often used interchangeably, which causes confusion about what distinguishes machine learning models from AI models in general.

Artificial Intelligence (AI)

Artificial Intelligence (AI) is a subfield within computer science associated with constructing machines that can simulate human intelligence. AI research deals with the question of how to create computers that are capable of intelligent behaviour.

Machine Learning (ML) is a subset of AI concerned with giving machines the ability to learn from experience (data) without being explicitly programmed. In other words, ML is one part of AI: every ML model is, by definition, an AI model, but not every AI model is an ML model (a hand-written, rule-based expert system, for example, is AI but not ML).

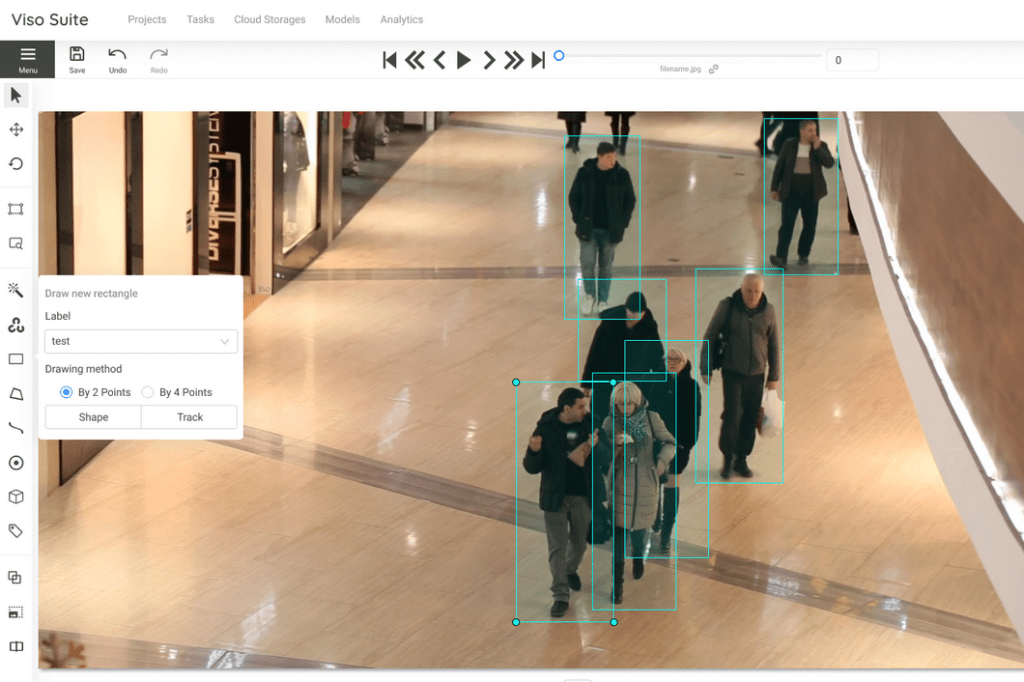

In ML, it’s important to distinguish between supervised and unsupervised learning, as well as a hybrid of the two called semi-supervised learning, which combines a small amount of labelled data with a larger amount of unlabelled data. In supervised learning, the algorithm is given a set of labelled training data: ground truth examples whose correct outputs were annotated manually, typically by data scientists or annotators. In computer vision, this labelling process is called image annotation. The model uses this data to learn how to make predictions (AI training) and then applies what it has learned to new data (AI inference).
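
To make the training and inference steps concrete, here is a minimal sketch in plain Python of a supervised classifier, assuming a tiny hypothetical labelled dataset of 2-D points. It uses a nearest-centroid classifier, chosen only for brevity (the article does not prescribe a specific algorithm): `train` averages the points of each label into a centroid, and `predict` assigns a new point the label of the closest centroid. The data and function names are illustrative.

```python
import math
from collections import defaultdict

# Hypothetical labelled training data: (features, ground-truth label) pairs.
TRAINING_DATA = [
    ((1.0, 1.2), "cat"),
    ((0.8, 1.0), "cat"),
    ((4.0, 4.2), "dog"),
    ((4.3, 3.9), "dog"),
]


def train(data):
    """AI training: learn one centroid (mean feature vector) per label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in data:
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    return {label: (s[0] / counts[label], s[1] / counts[label]) for label, s in sums.items()}


def predict(model, point):
    """AI inference: return the label whose centroid is closest to the new point."""
    return min(model, key=lambda label: math.dist(model[label], point))


model = train(TRAINING_DATA)
print(predict(model, (1.1, 0.9)))  # cat
print(predict(model, (3.8, 4.0)))  # dog
```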

Unsupervised learning, on the other hand, gives the algorithm raw data that has not been annotated. The algorithm is not told what the correct outputs are and must discover patterns or structure in the data on its own, for example by grouping similar data points into clusters. This makes unsupervised models suitable for tasks that require identifying hidden structure in unlabelled data, such as fraud detection or financial analysis.
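
For contrast, the sketch below clusters unlabelled points with k-means, one common unsupervised algorithm (chosen here for illustration; the article does not name a specific one). The points, `k=2`, and the fixed random seed are hypothetical. The algorithm is never told which group a point belongs to; it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points.

```python
import math
import random


def k_means(points, k, iterations=20, seed=0):
    """Group unlabelled points into k clusters; returns (centroids, assignments)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct data points
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Hypothetical unlabelled data: no "correct answer" is given to the algorithm.
points = [(1.0, 1.1), (0.9, 1.3), (1.2, 0.8), (5.0, 5.2), (5.3, 4.9), (4.8, 5.1)]
centroids, assignments = k_means(points, k=2)
print(assignments)
```

The cluster numbers themselves are arbitrary: the algorithm finds the two groups, but interpreting what each group means is left to a person or a later processing step.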

Deep Learning (DL)

Deep learning (DL) is a subset of machine learning, which in turn is a subset of artificial intelligence. Deep learning uses artificial neural networks with many layers that learn to recognise patterns directly from raw data such as images, audio, or text, whereas machine learning as a whole also includes many other algorithms, such as decision trees and linear models, that often rely on hand-engineered features.

What Is An AI Model?

In simple terms, an AI model is a program or algorithm that uses a set of data to recognise certain patterns and can then arrive at a decision without human intervention in the decision-making process.

This allows it to reach a conclusion or make a prediction when provided with sufficient information, which is often a huge amount of data. As a result, AI models are well suited to solving complex problems, where they can be more efficient, less costly, and more accurate than simpler methods.

What Is An ML Model?

A machine learning (ML) model is a kind of AI model that uses a mathematical function, whose parameters are learned from data, to make predictions. It is trained on a set of data and then used to make predictions about new, unseen data. Common examples of ML models include regression models and classification models.

What Is A DL Model?

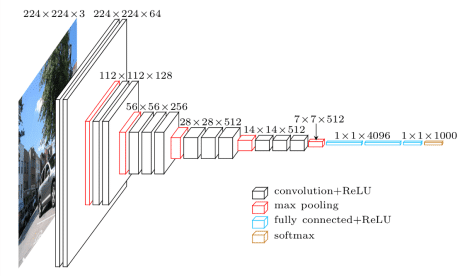

A deep learning model, or DL model, is a neural network that has been trained to perform a task, such as recognising objects in digital images and videos or understanding human speech. Deep learning models are trained on large datasets using algorithms that adjust the network's parameters until it learns to perform the task. In general, the more high-quality data the model is trained on, the better it performs.

The term “deep” in “deep learning” refers to the fact that DL models are composed of multiple layers of neurons, or processing nodes. The deeper the model, the more layers it has. Stacking layers allows the model to learn more complex tasks, because each layer builds on the features extracted by the previous one, from simple patterns such as edges to more abstract concepts. For example, ResNet is a deep learning model for computer vision tasks such as image recognition; its widely used ResNet-152 variant contains 152 layers.

YOLO, or “You Only Look Once,” is a family of deep learning models for real-time object detection. YOLOv7, which surpassed YOLOv4 and YOLOR, is very fast and accurate and was the state of the art for several AI vision tasks when it was released; newer YOLO versions have appeared since.

Deploy an AI model

To deploy and run an AI model, you need a computing device or server that provides enough processing power and storage for the workload. ML frameworks such as TensorFlow or PyTorch let you run an AI model with a few lines of code.

While prototyping is simple, managing AI pipelines and computing resources at scale is complex and requires sophisticated infrastructure. This is a major reason why many AI projects never move beyond the proof-of-concept (PoC) phase. A range of AI hardware is available for different tasks. Graphics Processing Units (GPUs) are widely used for both training and inference workloads (e.g., NVIDIA Jetson). Central Processing Units (CPUs) are used primarily for inference, but also for training workloads (e.g., Intel Xeon). Coprocessors and AI accelerators include the Intel VPU, Google Coral TPU, and Qualcomm NPU.

In the early days, the cloud was the main way to provide sufficient computing resources for AI workloads. Hosted platforms for developing and deploying AI models include Viso Suite, Hugging Face, Google Colab, and Amazon SageMaker. In recent years, a paradigm called edge computing has made it possible to deploy models at the network edge (Edge AI). Running AI models at the edge makes it possible to build real-world applications that are more efficient, private, and robust.

Overview of the most important AI Model types

In the following sections, we look at the most important AI model types and highlight their key characteristics.

1. Large Language Models (LLM)

An LLM, or Large Language Model, is an advanced artificial intelligence model designed to understand, generate, and interact with human language. These models are trained on enormous amounts of text data, enabling them to perform a wide range of natural language processing (NLP) tasks such as text generation, translation, summarisation, and question answering. LLMs such as the Generative Pre-trained Transformer (GPT) family, including OpenAI’s GPT-3.5 and GPT-4 models used in ChatGPT, use deep learning techniques, specifically transformer-based neural networks, to analyse and predict language patterns. This makes them capable of producing remarkably coherent and contextually relevant text.
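
LLMs are trained to predict the next token in a sequence. The toy sketch below illustrates only that next-word-prediction idea, using bigram counts over a tiny hypothetical corpus and greedy generation. It is not a transformer and says nothing about how GPT models are built internally, which rely on deep neural networks with billions of learned parameters.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=5):
    """Greedily append the most frequent next word, starting from `start`."""
    output = [start]
    for _ in range(length):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "the cat sat on the mat . the cat sat on the sofa"
model = train_bigrams(corpus)
print(generate(model, "the", length=4))  # the cat sat on the
```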

2. Deep Neural Networks

Deep Neural Networks (DNNs), among the most popular AI/ML models, are Artificial Neural Networks (ANNs) with multiple (hidden) layers between the input and output layers. Loosely inspired by the network of neurons in the human brain, they are built from interconnected units known as artificial neurons. DNN models are applied in many areas, including speech recognition, image recognition, and natural language processing (NLP).
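
The sketch below shows what “multiple hidden layers between the input and output layers” means computationally: a forward pass in plain Python through two hidden layers into a single output neuron. The weights are hypothetical hand-picked numbers rather than trained values (training, typically by backpropagation, is omitted), and ReLU and sigmoid are assumed as the activation functions.

```python
import math


def relu(values):
    """Activation for hidden layers: negative values become 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: each neuron computes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass the input through every hidden layer (with ReLU), then the output layer."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    out_weights, out_biases = layers[-1]
    logit = dense(activations, out_weights, out_biases)[0]
    return 1.0 / (1.0 + math.exp(-logit))  # sigmoid turns the output into a 0..1 score


# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 2 hidden neurons -> 1 output.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2]),
    ([[0.7, -0.4, 0.2], [0.1, 0.9, -0.3]], [0.0, 0.0]),
    ([[1.2, -0.8]], [0.05]),
]
print(forward([1.0, 2.0], LAYERS))
```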

3. Logistic Regression

Logistic regression is a very popular ML model and a common first choice for binary classification problems. It is a statistical model that predicts the probability that the dependent variable belongs to a class, given a set of independent variables, by passing a weighted sum of those variables through the sigmoid (logistic) function. It is similar to the linear regression model, except that its output is a probability used for classification rather than a continuous value.
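
A minimal sketch of logistic regression with one independent variable, fitted by plain gradient descent on the log loss. The learning rate, number of epochs, 0.5 decision threshold, and the hours-studied data are all illustrative assumptions; libraries typically use more sophisticated optimisers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the average log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log loss, the gradient uses the prediction error (p - y) of each example.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


# Hypothetical data: hours studied (x) and whether the exam was passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic(hours, passed)
p = sigmoid(w * 4.5 + b)
print(f"P(pass | 4.5 h) = {p:.2f} -> class {int(p >= 0.5)}")
```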

4. Decision Trees

In the field of artificial intelligence, the Decision Tree (DT) model reaches a conclusion by learning a sequence of simple if/else decisions from past data. A simple, efficient, and extremely popular model, the Decision Tree is named for the way it repeatedly divides the data into smaller portions, which resembles the branching structure of a tree. It can be applied to both regression and classification problems.
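
The sketch below grows a small classification tree in plain Python by repeatedly choosing the feature and threshold that best separate the labels, measured with Gini impurity. Gini impurity and the `max_depth` limit are assumptions made for this illustration (other split criteria exist), and the customer data is hypothetical. The nested tuples it returns are the learned if/else questions.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when all labels agree, larger when they are mixed."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Return (impurity, feature, threshold) of the split with the lowest weighted Gini."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({row[f] for row in rows}):
            left = [lab for row, lab in zip(rows, labels) if row[f] <= t]
            right = [lab for row, lab in zip(rows, labels) if row[f] > t]
            if not right:  # threshold puts every row on one side
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Grow the tree recursively; a leaf stores the majority label of its rows."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or gini(labels) == 0.0:
        return majority
    split = best_split(rows, labels)
    if split is None:  # no threshold can separate these rows
        return majority
    _, f, t = split
    left = [i for i, row in enumerate(rows) if row[f] <= t]
    right = [i for i, row in enumerate(rows) if row[f] > t]
    return (
        f,
        t,
        build_tree([rows[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
        build_tree([rows[i] for i in right], [labels[i] for i in right], depth + 1, max_depth),
    )


def predict(tree, row):
    """Answer the tree's if/else questions from the root down to a leaf label."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Hypothetical data: [age, income in k$] -> whether the customer bought a product.
X = [[25, 30], [35, 60], [45, 80], [20, 20], [50, 40], [30, 90]]
y = ["no", "yes", "yes", "no", "no", "yes"]
tree = build_tree(X, y)
print(tree)                     # (1, 40, 'no', 'yes')
print(predict(tree, [40, 70]))  # yes
```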

5. Linear Discriminant Analysis

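Linear Discriminant Analysis (LDA) is a classification method that is often used as an alternative to logistic regression, particularly when two or more classes need to be separated in the output. It finds a linear combination of features that best separates the classes, assuming that each class follows a Gaussian distribution with a shared covariance; it can also be used for dimensionality reduction. This model is useful for various tasks in fields such as computer vision and medicine.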
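
For two classes, Fisher’s formulation of LDA has a short closed-form solution: project the data onto w = S_w^-1 (m1 - m0), where m0 and m1 are the class means and S_w is the within-class scatter matrix, then compare the projection with a threshold. The plain-Python sketch below assumes 2-D features (so the 2x2 inverse can be written out by hand), equal class priors (the threshold sits halfway between the projected means), and hypothetical data.

```python
def mean(points):
    return [sum(col) / len(points) for col in zip(*points)]


def within_class_scatter(groups):
    """Sum over classes of the 2x2 scatter matrix of each point around its class mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for points in groups:
        m = mean(points)
        for p in points:
            d = [p[0] - m[0], p[1] - m[1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    return s


def fit_lda(class0, class1):
    """Two-class Fisher LDA: w = Sw^-1 (m1 - m0), threshold halfway between projected means."""
    m0, m1 = mean(class0), mean(class1)
    (a, b), (c, d) = within_class_scatter([class0, class1])
    det = a * d - b * c
    inv = [[d / det, -b / det], [-c / det, a / det]]  # inverse of a 2x2 matrix
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]

    def project(p):
        return w[0] * p[0] + w[1] * p[1]

    threshold = (project(m0) + project(m1)) / 2
    return w, threshold


def predict(w, threshold, point):
    return 1 if w[0] * point[0] + w[1] * point[1] > threshold else 0


class0 = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
class1 = [(6.0, 5.0), (7.0, 7.0), (8.0, 6.0), (7.0, 5.0)]
w, t = fit_lda(class0, class1)
print(predict(w, t, (2.5, 2.5)), predict(w, t, (7.0, 6.0)))  # 0 1
```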

6. Naive Bayes

Naive Bayes is a simple yet effective AI model that can solve a range of classification problems. It is based on Bayes’ theorem and is especially popular for text classification. The model assumes that the occurrence of any particular feature is independent of the occurrence of any other feature, given the class. Because this assumption rarely holds in practice, the model is called ‘naive’. It can be used for both binary and multi-class classification. Applications include medical data classification and spam filtering.
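
A minimal sketch of a multinomial Naive Bayes spam filter with add-one (Laplace) smoothing, which is one common variant for text; the four training messages are hypothetical. The ‘naive’ independence assumption shows up as a plain sum of per-word log probabilities, and working in log space avoids numerical underflow when many small probabilities are multiplied.

```python
import math
from collections import Counter


def train_nb(docs):
    """docs: list of (text, label). Returns class priors, per-class word counts, vocabulary."""
    class_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in docs:
        word_counts[label].update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    priors = {label: n / len(docs) for label, n in class_counts.items()}
    return priors, word_counts, vocab


def classify(model, text):
    """Pick the class with the highest log P(class) + sum of log P(word | class)."""
    priors, word_counts, vocab = model
    scores = {}
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        score = math.log(prior)
        for word in text.lower().split():
            # Add-one smoothing so an unseen word does not zero out the whole probability.
            score += math.log((word_counts[label][word] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


training = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team on monday", "ham"),
]
model = train_nb(training)
print(classify(model, "free prize money"))     # spam
print(classify(model, "team meeting monday"))  # ham
```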

7. Support Vector Machines

SVM, or Support Vector Machine, is a fast and efficient model that performs well on limited amounts of data. It is a supervised ML algorithm that finds the boundary (hyperplane) separating the classes with the widest possible margin. Compared with artificial neural networks, SVMs often train faster and perform better on datasets with few samples, such as in some text classification problems. Although the basic SVM is a binary classifier, variants exist for multi-class classification, outlier detection, and regression.
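
The sketch below trains a linear SVM in plain Python by full-batch subgradient descent on the regularised hinge loss. This is one simple way to train an SVM, chosen for readability; practical libraries use dedicated solvers and support kernels, which are omitted here. The regularisation strength `lam`, learning rate, epoch count, and the data are illustrative assumptions.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=3000):
    """Minimise lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by subgradient descent.

    `labels` must be +1 or -1.
    """
    n, dims = len(points), len(points[0])
    w, b = [0.0] * dims, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularisation term
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge loss is active
                grad_w = [g - y * xi / n for g, xi in zip(grad_w, x)]
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Report which side of the learned hyperplane w.x + b = 0 the point falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical, linearly separable 2-D data with labels -1 and +1.
points = [(1.0, 1.0), (2.0, 1.5), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5), (5.5, 6.5)]
labels = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(points, labels)
print(predict(w, b, (1.2, 1.8)), predict(w, b, (5.8, 5.2)))
```

Only the points that violate the margin contribute to each update, which mirrors the idea that an SVM’s boundary is determined by a few “support vectors” near it.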

8. Learning Vector Quantisation

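Learning Vector Quantisation (LVQ) is a type of artificial neural network that works on the winner-takes-all principle. It learns a set of prototype (codebook) vectors from labelled training data: for each training example, only the closest codebook vector is updated, moving towards the example if their classes match and away from it otherwise. Unseen vectors are then classified by the label of their nearest codebook vector. LVQ is used for solving multi-class classification problems.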
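
The sketch below implements the basic LVQ1 update rule in plain Python, assuming one starting codebook vector per class at hypothetical positions, a linearly decaying learning rate, and made-up 2-D data with three classes. For each training example, only the winning (nearest) prototype is updated.

```python
import math


def train_lvq(points, labels, prototypes, epochs=30, lr=0.3):
    """LVQ1: `prototypes` is a list of [vector, label] codebook entries, updated in place."""
    for epoch in range(epochs):
        rate = lr * (1 - epoch / epochs)  # linearly decaying learning rate
        for x, label in zip(points, labels):
            # Winner-takes-all: only the closest prototype is updated.
            winner = min(prototypes, key=lambda p: math.dist(p[0], x))
            direction = 1 if winner[1] == label else -1  # attract if labels match, else repel
            winner[0] = [w + direction * rate * (xi - w) for w, xi in zip(winner[0], x)]
    return prototypes


def classify(prototypes, x):
    """Return the label of the nearest codebook vector."""
    return min(prototypes, key=lambda p: math.dist(p[0], x))[1]


points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (6.5, 5.0),
          (7.0, 6.5), (1.0, 6.0), (1.5, 7.0)]
labels = ["A", "A", "A", "B", "B", "B", "C", "C"]
# One initial codebook vector per class (hypothetical starting positions).
prototypes = [[[3.0, 3.0], "A"], [[5.0, 5.0], "B"], [[3.0, 5.0], "C"]]
train_lvq(points, labels, prototypes)
print(classify(prototypes, (1.2, 1.4)), classify(prototypes, (6.2, 6.0)),
      classify(prototypes, (1.3, 6.4)))  # A B C
```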

9. K-nearest Neighbours

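The K-nearest Neighbours (kNN) model is a simple supervised ML model used for solving both regression and classification problems. It works on the assumption that similar data points lie near each other: a new point is assigned the majority label (for classification) or the average value (for regression) of its k closest training points. While it is a powerful model, one of its major disadvantages is that predictions slow down as the data volume grows, because each prediction compares the new point against the stored training data.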
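
A minimal kNN classifier in plain Python, assuming Euclidean distance, `k=3`, and hypothetical labelled points. There is no training step beyond storing the data; all the work happens at prediction time, which is where the slowdown on large datasets comes from.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Every prediction scans the whole training set.
    distances = sorted(
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 6.0), (6.0, 7.0)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(points, labels, (1.5, 1.5)))  # red
print(knn_predict(points, labels, (6.5, 6.2)))  # blue
```

For regression, the majority vote would be replaced by the average of the k neighbours’ target values.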

10. Random Forest

Random Forest is an ensemble learning model used for both regression and classification problems. It combines multiple decision trees using the bagging (bootstrap aggregating) method. To simplify, it builds a ‘forest’ of decision trees, each trained on a different random sample of the data (and typically a random subset of features), and merges their outputs by majority vote or averaging to produce more accurate predictions.
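
Here is a deliberately simplified random forest in plain Python, assuming hypothetical 2-D data. Each “tree” is only a one-level decision stump trained on a bootstrap sample (rows drawn with replacement) and one randomly chosen feature; full random forests instead grow deeper trees and sample a random subset of features at every split. The number of trees and the seed are illustrative.

```python
import random
from collections import Counter


def train_stump(rows, labels, feature):
    """Depth-1 tree on one feature: choose the threshold with the fewest training errors."""
    values = sorted({row[feature] for row in rows})
    # Candidate thresholds halfway between neighbouring values (or the single value if only one).
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
    best = None
    for t in thresholds:
        left = Counter(lab for row, lab in zip(rows, labels) if row[feature] <= t)
        right = Counter(lab for row, lab in zip(rows, labels) if row[feature] > t)
        left_label = left.most_common(1)[0][0]
        right_label = right.most_common(1)[0][0] if right else left_label
        errors = (sum(left.values()) - left[left_label]) + (sum(right.values()) - right[right_label])
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return feature, t, left_label, right_label


def train_forest(rows, labels, n_trees=25, seed=42):
    """Bagging: train each stump on a bootstrap sample and a randomly chosen feature."""
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        feature = rng.randrange(len(rows[0]))
        forest.append(train_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest


def predict_forest(forest, row):
    """Aggregate the stumps' predictions by majority vote."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Hypothetical data: two well-separated groups labelled 0 and 1.
rows = [[1, 2], [2, 1], [2, 3], [3, 2], [7, 8], [8, 7], [8, 9], [9, 8]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = train_forest(rows, labels)
print(predict_forest(forest, [2, 2]), predict_forest(forest, [8, 8]))  # 0 1
```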

11. Linear Regression

Used extensively in statistics, linear regression is a supervised learning model. It finds a linear relationship between the input (independent) variables and the output (dependent) variable. In simpler words, it predicts the value of a dependent variable from one or more independent variables. Linear regression models are widely used in various industries, including banking, retail, construction, healthcare, and insurance.
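
For a single independent variable, the least-squares line has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the two means. A minimal plain-Python sketch, using hypothetical advertising data:

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ slope * x + intercept (one independent variable)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend (k$) vs. sales (k units).
spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_line(spend, sales)
print(f"sales ≈ {slope:.2f} * spend + {intercept:.2f}")  # sales ≈ 1.97 * spend + 1.09
```

With several independent variables, the same idea generalises to multiple linear regression, which is usually solved with matrix methods.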

What’s Next?

To sum up, different artificial intelligence models are used to solve different problems, from self-driving cars to object detection, face recognition, and pose estimation. Being aware of these models is therefore essential for identifying the one best suited to a particular task. With AI adoption growing rapidly, these models are likely to be applied across more and more industries.