Consumer faith in cybersecurity, data privacy, and responsible AI hinges on what companies do today—and establishing this digital trust just might lead to business growth. The results of recent surveys of more than 1,300 business leaders and 3,000 consumers globally suggest that establishing trust in products and experiences that leverage AI, digital technologies, and data not only meets consumer expectations but also could promote growth.

The research indicates that organisations that are best positioned to build digital trust are also more likely than others to see annual growth rates of at least 10% on their top and bottom lines. However, only a small contingent of companies surveyed are set to deliver. The research suggests what these companies are doing differently.

The incongruous state of digital trust

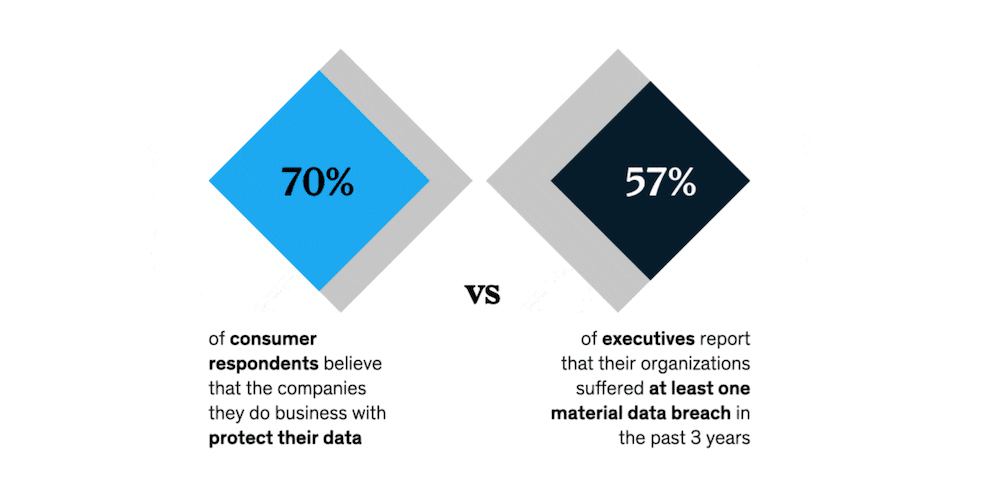

A majority of consumers believe that the companies they do business with provide the foundational elements of digital trust, which we define as confidence in an organisation to protect consumer data, enact effective cybersecurity, offer trustworthy AI-powered products and services, and provide transparency around AI and data usage. However, most companies aren’t putting themselves in a position to live up to consumers’ expectations.

Consumers value digital trust

Consumers report that digital trust truly matters—and many will take their business elsewhere when companies don’t deliver it.

Consumers believe that companies establish a moderate degree of digital trust

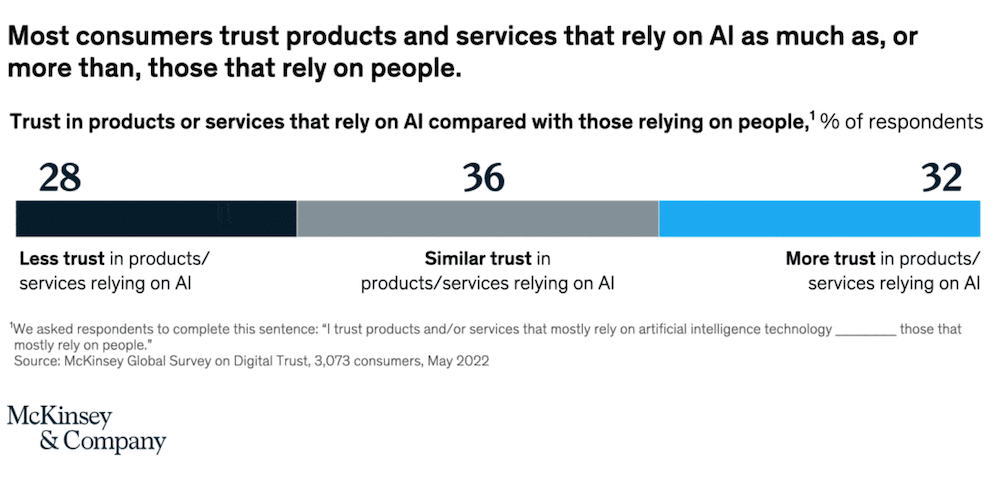

When it comes to how organisations are performing on digital trust, consumers express a surprisingly high degree of confidence in AI-powered products and services compared with products that rely mostly on humans. They exhibit a more moderate level of confidence that the companies they do business with are protecting their data. For organisations, this suggests that digital trust is largely theirs to lose.

More than two-thirds of consumers say that they trust products or services that rely mostly on AI the same as, or more than, products that rely mostly on people. The most frequent online shoppers, consumers in Asia–Pacific, and Gen Z respondents globally express the most faith in AI-powered products and services, frequently reporting that they trust products relying on AI more than those relying largely on people—41%, 49%, and 44%, respectively.

However, these survey results could be influenced, at least in part, by the fact that consumers may not always understand when they are interacting with AI. Although home voice-assisted devices (for example, Amazon’s Alexa, Apple’s Siri, or Google Home) frequently use AI systems, only 62% of respondents say that it is likely that they are interacting with AI when they ask one of these devices to play a song.

While 59% of consumers think that, in general, companies care more about profiting from their data than protecting it, most respondents have confidence in the companies they choose to do business with: 70% of consumers express at least a moderate degree of confidence that the companies they buy products and services from are protecting their data.

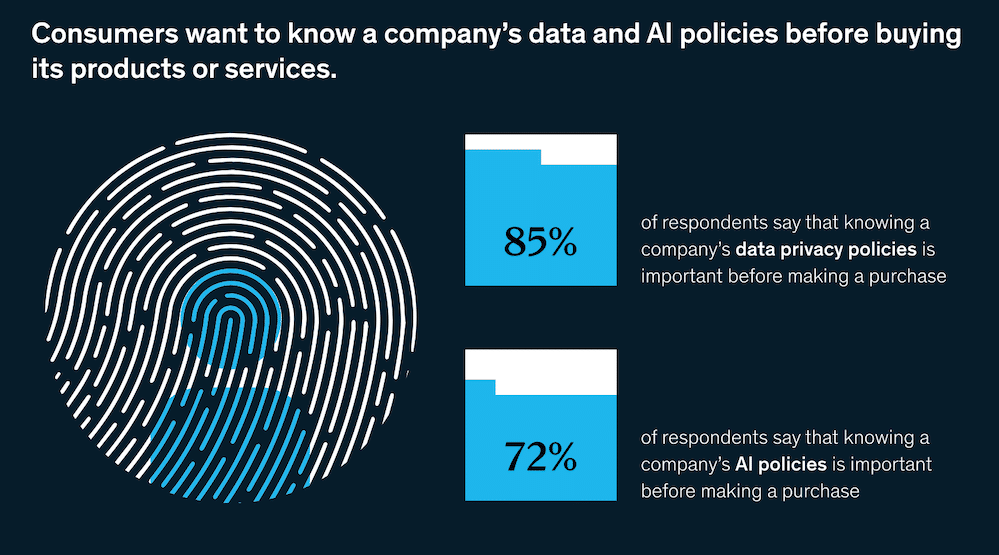

And the data suggest that a majority of consumers believe that the businesses they interact with are being transparent, at least about their AI and data privacy policies: 67% of consumers have confidence in their ability to find information about company data privacy policies, and a smaller majority, 54%, are confident that they can surface company AI policies.

Most businesses are failing to protect against digital risks

Research shows that companies have an abundance of confidence in their ability to establish digital trust. Nearly 90% believe that they are at least somewhat effective at mitigating digital risks, and a similar proportion report that they are taking a proactive approach to risk mitigation (for example, employing controls to prevent exploitation of a digital vulnerability rather than reacting only after the vulnerability has been exploited).

Of the nearly three-quarters of companies reporting that they have codified data ethics policies (meaning those that detail, for example, how to handle sensitive data and provide transparency on data collection practices beyond legally required disclosures) and the 60% with codified AI ethics policies, almost every respondent has at least a moderate degree of confidence that those policies are being followed by employees.

However, the data show that this assuredness is largely unfounded. Fewer than a quarter of executives report that their organisations are actively mitigating a variety of digital risks across most of their organisations, such as those posed by AI models, data retention and quality, and lack of talent diversity. Cybersecurity risk was mitigated most often, though only by 41% of respondents’ organisations.

Given this disconnect between assumed and actual coverage, it is likely no surprise that 57% of executives report that their organisations suffered at least one material data breach in the past three years (Exhibit 3). Further, many of these breaches resulted in a financial loss (42% of the time), customer attrition (38%), or other consequences.

A similar 55% of executives experienced an incident in which active AI (for example, AI in use in an application) produced outputs that were biased or incorrect or that did not reflect the organisation’s values. Only a little over half of these AI errors were publicized. These AI mishaps, too, frequently resulted in consequences, most often employees’ loss of confidence in using AI (38% of the time) and financial losses (37%).

Advanced industries—including aerospace, advanced electronics, automotive and assembly, and semiconductors—reported both AI incidents and data breaches most often, with 71% and 65% reporting them, respectively. Business, legal, and professional services reported material AI malfunctions least often (49%), and telecom, media, and tech companies reported data breaches least often (55%). By region, AI and data incidents were reported most by respondents at organisations in Asia–Pacific (64%) and least by those in North America (41% reported data breaches, and 35% reported AI incidents).