On 6th February 2024, the UK Government unveiled its long-awaited response to last year’s White Paper consultation on regulating Artificial Intelligence (AI). As expected, the Government’s “pro-innovation” approach, spearheaded by the Department for Science, Innovation and Technology (DSIT), remains largely unchanged from the original proposals.

It is a principles-based, non-statutory, and cross-sector framework. It aims to balance innovation and safety by applying the existing technology-neutral regulatory framework to AI. The UK recognises that legislative action will ultimately be necessary, particularly for general-purpose AI (GPAI) systems. However, it maintains that doing so now would be premature, and that the risks and challenges associated with AI, the regulatory gaps, and the best way to address them must first be better understood.

This approach contrasts with other jurisdictions, such as the EU and to some extent the US, which are adopting more prescriptive legislative measures. It suggests that, despite international cooperation agreements, global AI regulatory approaches are likely to diverge.

How does the UK define AI?

There is no formal definition of AI. Instead, an outcomes-based approach, which focuses on two defining characteristics – adaptivity and autonomy – will guide sectoral interpretations.

UK regulators, like Ofcom or the Financial Conduct Authority (FCA), can interpret adaptivity and autonomy to create domain-specific definitions, if beneficial. This raises the risk that different interpretations by regulators could create regulatory uncertainty for businesses operating across sectors. To address this, the Government plans to establish a Central Function to coordinate among regulators, discussed further below.

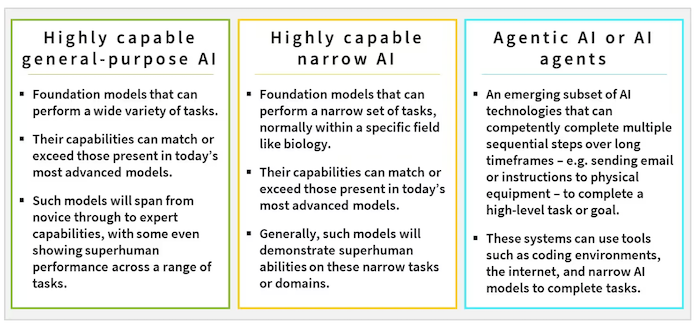

Distinguishing between types of advanced AI systems

While the general definition of AI remains unchanged, the final framework distinguishes, and provides initial definitions for, three types of the most powerful AI systems: highly capable GPAI, highly capable narrow AI and agentic AI (Figure 1). These models are often integrated into numerous downstream AI systems. Large Language Models, which serve as the basis for many generative AI systems, are a prime example of highly capable GPAI.

Figure 1 – Three types of advanced AI systems

Leading AI companies developing highly capable AI systems committed to voluntary safety and transparency measures ahead of the first global AI Safety Summit, hosted by the UK Government last November. They also pledged to collaborate with the new UK AI Safety Institute to test their AI systems pre- and post-deployment.

Securing this commitment was an important achievement and a significant positive step. However, as these systems’ capabilities continue to grow, the Government anticipates that a distinct legislative and regulatory approach will be necessary to tackle the risks posed by highly capable GPAI.

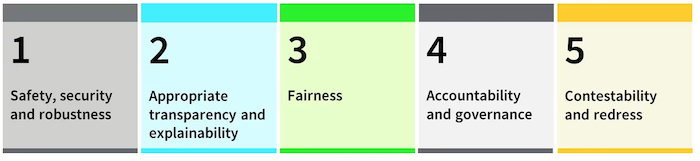

The five principles underpinning the framework

The UK’s final AI framework sets out five cross-sectoral principles for existing regulators to interpret and apply within their own remits to guide responsible AI design, development, and use.

Figure 2 – Five cross-sector principles for responsible AI

The Government’s strategy for implementing these principles is predicated on three core pillars:

- Leveraging existing regulatory authorities and frameworks

- Establishing a central function to facilitate effective risk monitoring and regulatory coordination

- Supporting innovation by piloting a multi-agency advisory service – the AI and Digital Hub

We will examine each of these in detail below.

1. Leveraging existing regulatory authorities and frameworks

As expected, the UK has no plans to introduce a new AI regulator to oversee the implementation of the framework. Instead, existing regulators, such as the Information Commissioner’s Office (ICO), Ofcom, and the FCA, have been asked to implement the five principles as they regulate and supervise AI within their respective domains. Regulators are expected to use a proportionate, context-based approach, utilising existing laws and regulations.

Non-statutory approach and guidance to regulators

Despite regulators’ crucial role, neither their implementation of the principles nor their collaboration with one another will be a legal requirement. The Government anticipates the need to introduce a legal duty on regulators to give due consideration to the framework’s principles. The decision to do so, and its timing, will be influenced by the regulators’ strategic AI plans and a planned review of regulatory powers and remits, to be conducted by the Central Function, as we explain below.

Regulators asked to publish their AI strategic plans by April 2024

Yet, there is no doubt that the Government expects individual regulators to take swift action to implement the AI regulatory framework in their respective domains. This expectation was communicated clearly through individual letters sent by DSIT to a selection of leading regulators, requesting them to publish their strategic approach to AI regulation by 30th April 2024. DSIT clarified that the plans should include the following:

- An outline of the measures to align their AI plans with the framework’s principles

- An analysis of AI-related risks within their regulated sectors

- An explanation of their existing capacity to manage AI-related risks

- A plan of activities for the next 12 months, including additional AI guidance

The detailed nature of the request and the short deadline illustrate the urgency with which the Government expects the regulators to act. Indeed, the publication of these plans will be a significant development, providing the industry with valuable insights into regulators’ strategic direction and forthcoming guidance and initiatives.

Firms, in turn, must be prepared to respond to increasing regulatory scrutiny, implement guidelines and support information-gathering exercises. For example, the Competition and Markets Authority has already launched an initial review of the market for foundation models.

From a sector perspective, regulatory priorities may include addressing deep fakes under Ofcom’s illegal harms duties in the Online Safety Act, using the FCA’s ‘skilled person’ powers in algorithmic auditing for financial services, or addressing fair customer outcomes through the Consumer Duty framework. From a broader consumer protection perspective, activities may involve leveraging the Consumer Rights Act to safeguard consumers who have entered into sales contracts for AI-based offerings, or using existing product safety laws to ensure the safety of goods with integrated AI.

The plans will also highlight the areas where regulatory coordination is necessary, particularly regarding any supplementary joint regulatory guidance. Businesses would welcome increased confidence that following one regulator’s interpretation of the framework’s principles in specific use cases (e.g., on transparency or fairness) will not conflict with another regulator’s interpretation. In this context, regulatory collaboration, in particular via the Digital Regulation Cooperation Forum (DRCF), will also play an important role in facilitating regulatory alignment.

Additional funding to boost regulatory capabilities

Responding to a fast-moving technological landscape will continue to challenge regulators and firms. While AI is undoubtedly a key priority, publishing both strategic plans (by the end of April) and AI guidance (within 12 months) may be challenging for some regulators. In recognition of this, DSIT announced £10 million to help regulators put in place the tools, resources and expertise they need to adapt and respond to AI.

This is a significant investment. Yet, with 90 regulators, determining how to prioritise its allocation and getting the best value for money will require careful consideration. The Government will work closely with regulators in the coming months to determine how to distribute the funds. Increasing the pooling of resources to develop shared critical tools and capabilities – such as algorithmic forensics or auditing – may also be an effective way to make the best use of limited resources.

2. Central function to support regulatory capabilities and coordination

Given the widespread impact of AI, individual regulators cannot fully address the opportunities and risks presented by AI technologies in isolation. To address this, the Government has set up a new Central Function within DSIT to monitor and evaluate AI risks, promote coherence and address regulatory gaps. Key deliverables will include an ongoing review of regulatory powers and remits, the development of a cross-economy AI risk register, and continued collaboration with existing regulatory forums such as the DRCF.

By spring 2024, the Central Function will formalise its coordination efforts and establish a steering committee consisting of representatives from the Government and key regulators. The success of the UK’s AI regulatory approach will rely heavily on the effectiveness of this new unit. Efficient coordination between regulators, consistent interpretation of principles, and clear supervisory expectations for firms are crucial to realising the framework’s benefits.

3. AI & Digital Hub

A pilot multi-regulator advisory service called the AI and Digital Hub will be launched in spring 2024 by the DRCF to help innovators navigate multiple legal and regulatory obligations before product launch. The Hub will be open to firms meeting the eligibility criteria. It is intended not only to facilitate compliance but also to foster enhanced cooperation among regulators.

The DRCF has also confirmed that it will publish the outcomes of inquiries handled within the Hub as case studies. This addresses a common frustration raised by observers of similar innovation hub initiatives: insights gained are not shared with a wider range of innovators, which limits the benefits to the wider community. Another crucial step will be to ensure that the insights filter through more quickly into regulators’ broader regulatory and supervisory strategies.

Future regulation of developers of highly capable GPAI systems

The UK’s approach to GPAI has undergone an important shift, moving away from a sole focus on voluntary measures towards recognising the need for future targeted regulatory interventions. Such interventions will be aimed at a select group of developers of the most powerful GPAI models and systems and could cover transparency, data quality, risk management, accountability, corporate governance, and addressing harms from misuse or unfair bias.

However, the Government has not yet proposed any specific mandatory measures. Instead, it made clear that additional legislation would be introduced only if certain conditions are met. These include being confident that existing legal powers are insufficient to address GPAI risks and opportunities, and that the implementation of voluntary transparency and risk management measures is also ineffective.

This approach could lead to some immediate challenges for organisations. For example, DSIT itself highlights that failing to allocate liability appropriately across the value chain, rather than placing it solely on firms deploying AI, could lead to harmful outcomes and undermine adoption and innovation.

Additionally, a highly specialised, concentrated market of GPAI providers can make it challenging for smaller organisations deploying GPAI systems to negotiate effective contractual protections. In the EU, GPAI providers will be subject to specific obligations under the EU AI Act and will also need to provide technical documentation to downstream providers. In the UK, however, many measures will only be voluntary for the time being. To mitigate risks, individual organisations should implement additional safeguards. For example, they could require enhanced human review to manage the risk of discrimination if a GPAI provider limits or excludes liability for discrimination, and review contracts with clients to exclude or cap liability where appropriate and legally possible.

As for the other two types of advanced AI models, namely highly capable narrow AI and agentic AI, the Government will continue to gather evidence to determine the appropriate approach to address the risks and opportunities they pose.

International alignment and competitiveness

The UK recognises the importance of international cooperation in ensuring AI safety and effective governance, as demonstrated by the inaugural global AI Safety Summit held last year. The event drew participation from 28 leading AI countries, including the US and the EU, with 2024 summits scheduled to be hosted by South Korea and France.

However, while most jurisdictions aim for the same outcomes in AI safety and governance, there are differences in national approaches. For example, while the UK has been focusing on voluntary measures for GPAI, the EU has opted for a comprehensive and prescriptive legislative approach. The US is also introducing some mandatory reporting requirements, for example, for foundation models that pose serious national or economic security risks. In the short term, these may serve as de facto standards or reference points for global firms, particularly due to their extraterritorial effects. For example, the EU AI Act will affect organisations marketing or deploying AI systems in the EU, regardless of their location.

While there are advantages to adopting a non-statutory approach, it is also important that it is implemented in a way that secures international recognition and, where applicable, equivalence with other leading national frameworks. To achieve this, it will be important for the UK Government to establish a clear and well-defined policy position on key AI issues as part of its framework implementation, endorsed by all regulators. This will help the UK to continue to promote responsible and safe AI development and maintain its reputation as a leader in the field.

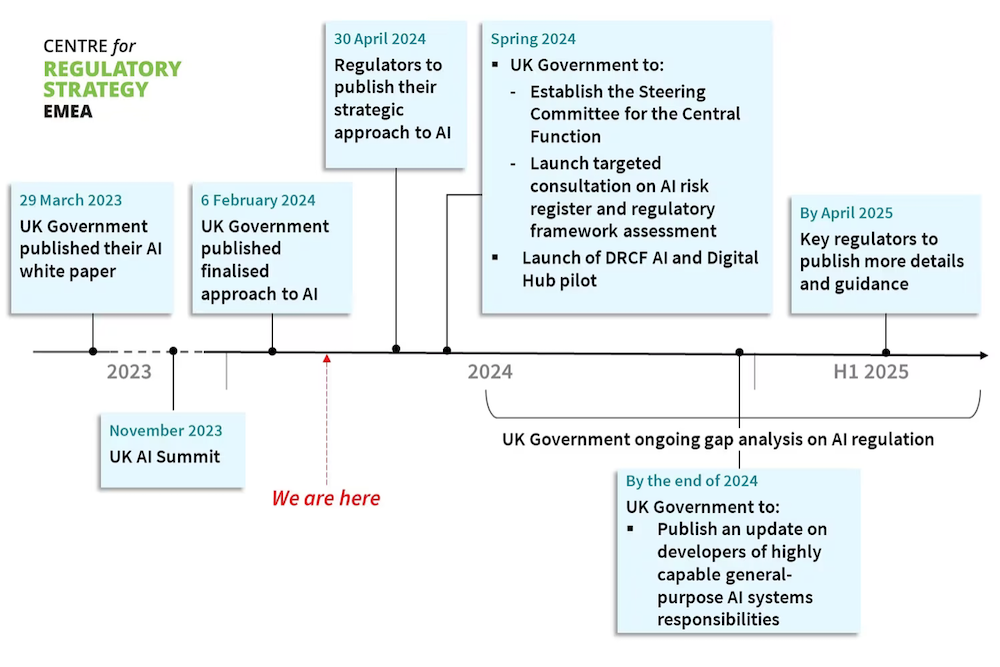

Next steps – timeline of selected actions to implement the framework

The Government has unveiled a substantial array of actions and next steps as part of its roadmap for implementing the finalised AI framework, summarised in the accompanying timeline.

Conclusion

The finalisation of the AI regulatory approach is only the initial step. The challenge now lies in ensuring its effective implementation across various regulatory domains, including data protection, competition, communications, and financial services. Some regulators are already moving ahead of the framework. For instance, the ICO has updated its guidance on AI and data protection to clarify fairness-related requirements.

Given the multitude of regulatory bodies involved, effective governance of the framework will be paramount. The Government’s newly established Central Function will play a pivotal role in ensuring effective coordination among regulators and mitigating any negative impact of divergent interpretations. This will be crucial for providing businesses with the necessary regulatory clarity to adopt and scale their investment in AI, thereby bolstering the UK’s competitive edge.

Footnotes

[1] The ability of AI systems to see patterns and make decisions in ways not directly envisioned by human programmers.

[2] The capacity of AI systems to operate, take actions, or make decisions without the express intent or oversight of a human.

[3] AI systems that are built using other AI systems or components.

[4] The Government has written to the Office of Communications (Ofcom); Information Commissioner’s Office (ICO); Financial Conduct Authority (FCA); Competition and Markets Authority (CMA); Equality and Human Rights Commission (EHRC); Medicines and Healthcare Products Regulatory Agency (MHRA); Office for Standards in Education, Children’s Services and Skills (Ofsted); Legal Services Board (LSB); Office for Nuclear Regulation (ONR); Office of Qualifications and Examinations Regulation (Ofqual); Health and Safety Executive (HSE); Bank of England; and Office of Gas and Electricity Markets (Ofgem). The Office for Product Safety and Standards (OPSS), which sits within the Department for Business and Trade, has also been asked to produce an update.

[5] The DRCF is a voluntary cooperation forum that facilitates engagement between regulators on digital policy areas of mutual interest. It currently has four members: the FCA, ICO, CMA and Ofcom.