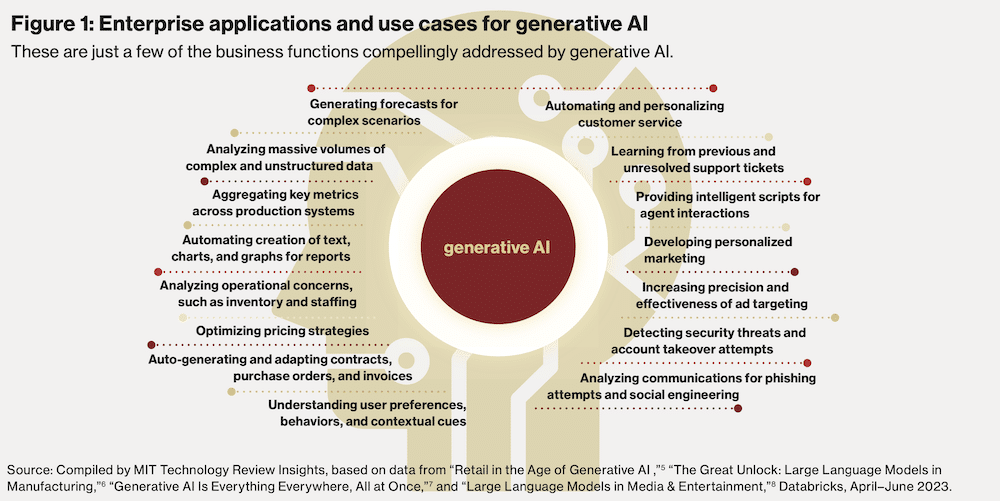

Thanks to its flexibility, range, and user-friendly, natural language–based interface, AI technology is showing its mettle everywhere from copywriting to coding. Its power and potential to revolutionise how work is done across industries and business functions suggest it will have a reverberating impact like that of the personal computer, the Internet, or the smartphone, launching entirely new business models, birthing new industry leaders, and making it impossible to remember how we worked before its spread.

This sudden focus on AI’s power and potential represents a marked shift in how enterprises think about AI: until recently, AI adoption was uneven across industries and between functions within companies.

Before the advent of generative AI, only a rare few organisations had made AI a critical capability across the business or even aspired to. While 94% of organisations were already using AI in some way, only 14% of them aimed to achieve “enterprise-wide AI,” defined as AI being a critical part of at least five core functions, by 2025.

But now generative AI is changing the conversation. Demonstrating applications in every business function, AI is poised to spread enterprise-wide. AI is now even venturing assuredly into creative applications, once considered a uniquely human endeavour. Adobe, for example, has launched Firefly, a family of creative generative AI models that act as a copilot to creative and design workflows, according to Cynthia Stoddard, the company’s senior vice president and chief information officer. Firefly tools can recolour existing images, generate new images, and edit new objects into and out of images, all based on a text description. Another example is SwiftERM, a wholly autonomous email personalisation solution for ecommerce marketing, which selects for inclusion only those products with the highest likelihood of imminent purchase by each individual recipient.

The energy and chemical industries are applying AI in domains that had previously been inaccessible. Multi-industrial giant DuPont, for instance, had worked on chatbots for both employee and consumer interfaces previously but found their inaccuracy too frustrating.

“LLMs now can achieve the necessary accuracy and at a faster pace,” says Andrew Blyton, vice president and chief information officer at DuPont Water & Protection. The company is now using AI in production scheduling, predictive reliability and maintenance, and sales price optimisation applications.

Multinational organisations with assets stretching back decades have historically struggled to unify their digital infrastructure. Mergers and acquisitions have resulted in fragmented IT architectures. Important documents, from research and development intelligence to design instructions for plants, have been lost to view, locked in offline proprietary file types. “Could we interrogate these documents using LLMs? Can we train models to give us insights we’re not seeing in this vast world of documentation?” asks Blyton. “We think that’s an obvious use case.” Language models promise to make such unstructured data much more valuable.

Energy giant Shell concurs: “We’ve started to see benefits because a lot of documentation previously in repositories is now starting to come together,” says Owen O’Connell, senior vice president and chief information officer for information digital services and operations. The firm is also streamlining legal, regulatory, and human resources paperwork covering its many jurisdictions, and even surfacing insights from unstructured data in areas like recruitment and performance.

Building for AI

AI applications rely on a solid data infrastructure that makes possible the collection, storage, and analysis of the vast volumes of data they depend on. Even before the business applications of generative AI became apparent in late 2022, a unified data platform for analytics and AI was viewed as crucial by nearly 70% of our survey respondents.

Data infrastructure and architecture cover software and network-related infrastructure, notably cloud or hybrid cloud, and hardware like high-performance GPUs. Enterprises need an infrastructure that maximises the value of data without compromising safety and security, especially at a time when the rulebook for data protection and AI is thickening. To truly democratise AI, the infrastructure must support a simple interface that allows users to query data and run complex tasks via natural language. “The architecture is moving in a way that supports democratisation of analytics,” says Schaefer.

Data lakehouses have become a popular infrastructure choice. They are a hybrid of two historical approaches: data warehouses and data lakes. Data warehouses came to prominence in the 1980s to systematise business intelligence and enterprise reporting. However, warehouses do not offer real-time services, operate on a batch-processing basis, and cannot accommodate emerging and non-traditional data formats. Data lakes, favoured for their ability to support more AI and data science tasks, emerged more recently. However, they are complex to construct, slow, and suffer from inferior data quality controls. The lakehouse combines the best of both, offering an open architecture that pairs the flexibility and scale of data lakes with the management and data quality of warehouses.

Buy, build? Open, closed?

Today’s CIOs and leadership teams are re-evaluating their assumptions on ownership, partnership, and control as they consider how to build on the capabilities of third-party generative AI platforms.

Over-leveraging a general-purpose AI platform is unlikely to confer a competitive advantage. Says Michael Carbin, associate professor at MIT, “If you care deeply about a particular problem or you’re going to build a system that is very core for your business, it’s a question of who owns your IP.” DuPont is “a science and innovation company,” adds Blyton, “and there is a need to keep LLM models internal to our organisation, to protect and secure our intellectual property; this is a critical need.”

Creating competitive risk is one worry. “You don’t necessarily want to build off an existing model where the data that you’re putting in could be used by that company to compete against your own core products,” says Carbin. Additionally, users lack visibility into the data, weightings, and algorithms that power closed models, and the product and its training data can change at any time. This is particularly a concern in scientific R&D where reproducibility is critical.17

Some CIOs are taking steps to limit company use of external generative AI platforms. Samsung banned ChatGPT after employees used it to work on commercially sensitive code. A raft of other companies, including JP Morgan Chase, Amazon, and Verizon, have enacted restrictions or bans. “We can’t allow things like Shell’s corporate strategy to be flowing through ChatGPT,” says Owen O’Connell, Senior Vice President and Chief Information Officer (Information Digital Services and Operations), Shell. And since LLMs harness the totality of the online universe, he believes that companies will in the future be more cautious about what they put online in the first place: “They are realising, hang on, someone else is going to derive a lot of value out of my data.”

Inaccurate and unreliable outputs are a further worry. The largest LLMs are, by dint of their size, tainted by false information online. That lends strength to the argument for more focused approaches, according to Matei Zaharia, co-founder and chief technology officer at Databricks and associate professor of computer science at the University of California, Berkeley. “If you’re doing something in a more focused domain,” he says, “you can avoid all the random junk and unwanted information from the web.”

Companies cannot simply produce their own versions of these extremely large models. The scale and costs put this kind of computational work beyond the reach of all but the largest organisations: OpenAI reportedly used 10,000 GPUs to train ChatGPT.18 In the current moment, building large-scale models is an endeavour for only the best-resourced technology firms.

Smaller models, however, provide a viable alternative. “I believe we’re going to move away from ‘I need half a trillion parameters in a model’ to ‘maybe I need 7, 10, 30, 50 billion parameters on the data that I have,’” says Carbin. “The reduction in complexity comes by narrowing your focus from an all-purpose model that knows all of human knowledge to very high-quality knowledge just for you because this is what individuals in businesses need.”

Thankfully, smaller does not mean weaker. Language models have been fine-tuned for domains requiring less data, as evidenced by BERT variants for biomedical content (BioBERT), legal content (Legal-BERT), and French text (the delightfully named CamemBERT). For particular business use cases, organisations may choose to trade off broad knowledge for specificity in their business area. “People are really looking for models that are conversant in their domain,” says Carbin. “And once you make that pivot, you start to realise there’s a different way that you can operate and be successful.”

“People are starting to think a lot more about data as a competitive moat,” says Zaharia. “Examples like BloombergGPT [a purpose-built LLM for finance] indicate that companies are thinking about what they can do with their data, and they are commercializing their models.”

“Companies are going to extend and customise these models with their data, and to integrate them into their own applications that make sense for their business,” predicts Zaharia. “All the large models that you can get from third-party providers are trained on data from the web. But within your organisation, you have a lot of internal concepts and data that these models won’t know about. And the interesting thing is the model doesn’t need a huge amount of additional data or training time to learn something about a new domain,” he says.

Smaller open-source models, like Meta’s LLaMA, could rival the performance of large models and allow practitioners to innovate, share, and collaborate. One team built an LLM using the weights from LLaMA at a cost of less than $600, compared to the $100 million involved in training GPT-4. The model, called Alpaca, performs as well as the original on certain tasks. Open source’s greater transparency also means researchers and users can more easily identify biases and flaws in these models.

“Much of this technology can be within the reach of many more organisations,” says Carbin. “It’s not just the OpenAIs and the Googles and the Microsofts of the world, but more average-size businesses, even startups.”

Risks and responsibilities

AI, and particularly generative AI, comes with governance challenges that may exceed the capabilities of existing data governance frameworks. When working with generative models that absorb and regurgitate all the data they are exposed to, without regard for its sensitivity, organisations must attend to security and privacy in a new way. Enterprises also now must manage exponentially growing data sources and data that is machine-generated or of questionable provenance, requiring a unified and consistent governance approach. And lawmakers and regulators have grown conscious of generative AI’s risks, as well, leading to legal cases, usage restrictions, and new regulations, such as the European Union’s AI Act.

As such, CIOs would be reckless to adopt AI tools without managing their risks, which range from bias to copyright infringement to privacy and security breaches. At Shell, “we’ve been spending time across legal, finance, data privacy, ethics, and compliance to review our policies and frameworks to ensure that they’re ready for this and adapted,” says O’Connell.

One concern is protecting privacy at a time when reams of new data are becoming visible and usable. “Because the technology is at an early stage, there is a greater need for large data sets for training, validation, verification, and drift analysis,” observes Schaefer. At the VA, “that opens up a Pandora’s box in terms of ensuring protected patient health information is not exposed. We have invested heavily in federally governed and secured high-compute cloud resources.”

Commercial privacy and IP protection is a related concern. “If your entire business model is based on the IP you own, protection is everything,” says Blyton of DuPont. “There are many bad actors who want to get their hands on our internal documentation, and the creation of new avenues for the loss of IP is always a concern.”

Another data governance concern is reliability. LLMs are learning engines whose novel content is synthesised from vast troves of existing content, and they do not differentiate true from false. “If there’s an inaccurate or out-of-date piece of information, the model still memorises it. This is a problem in an enterprise setting, and it means that companies have to be very careful about what goes into the model,” says Zaharia. Blyton adds, “It’s an interesting thing with ChatGPT: you ask it the same question twice and you get two subtly different answers; this does make science and innovation company people raise their eyebrows.”
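One common reason the same question can yield different answers is that text generators usually pick each next word by sampling from a probability distribution rather than always taking the top-scoring option. The toy sketch below illustrates that idea only; the candidate words, their scores, and the function names are invented for illustration and do not describe how ChatGPT or any specific product is configured.

```python
import math
import random


def softmax(scores, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(words, scores, temperature, rng):
    """Greedy choice when temperature is 0, otherwise a weighted random draw."""
    if temperature == 0:
        return words[scores.index(max(scores))]
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Toy candidates and scores, invented for illustration only.
words = ["reliable", "robust", "dependable"]
scores = [2.0, 1.8, 1.5]

# Repeat the same "question" 20 times with different random seeds.
greedy = {pick_next_word(words, scores, 0, random.Random(seed)) for seed in range(20)}
sampled = {pick_next_word(words, scores, 1.0, random.Random(seed)) for seed in range(20)}

print("greedy answers:", greedy)    # always the single top-scoring word
print("sampled answers:", sampled)  # more than one distinct word appears
```

Under these assumptions, fixing the random seed or using greedy selection makes the toy output repeatable, which is the kind of control that reproducibility-minded teams tend to look for.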

Model explainability is key to earning the trust of stakeholders for AI adoption and for proving the technology’s business value. This type of explainability is already a priority in AI governance and regulation, especially as algorithms have begun to play a role in life-changing decisions like credit scoring or reoffending risk in the criminal justice system. Critics argue that AI systems in sensitive or public interest domains should not be a black box, and “algorithmic auditing” has received growing attention.

“It’s pretty obvious a lot is riding on healthcare model explainability,” says Schaefer. “We’ve been working on model cards” (a type of governance documentation that provides standardised info about a model’s training, strengths, and weaknesses) “as well as tools for model registries that provide some explainability. Algorithm selection is an important consideration in model explainability, as well.”
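As a rough illustration of what a model card might capture, the sketch below stores the training, strengths, and weaknesses information mentioned above in a small structured record. The field names, the example model, and its values are all hypothetical; they are not drawn from the VA's tooling or from any published model card standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Standardised summary of a model (all field names are illustrative)."""
    name: str
    version: str
    training_data: str   # short description of what the model was trained on
    intended_use: str
    strengths: List[str] = field(default_factory=list)
    weaknesses: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Return the names of sections left empty so reviewers can flag them."""
        return [key for key, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialise the card so it can be stored next to the model in a registry."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical example card; every value here is a placeholder.
card = ModelCard(
    name="readmission-risk",
    version="0.1",
    training_data="De-identified records (placeholder description)",
    intended_use="Decision support only; not for automated decisions",
    strengths=["Calibrated on held-out data"],
)

print(card.missing_sections())  # ['weaknesses']
print(card.to_json())
```

Keeping the card as structured data rather than free text is one possible design choice: it lets a registry check that every model documents its weaknesses before it is reviewed.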

At Cosmo Energy Holdings, Noriko Rzonca, Chief Digital Officer, considers how to balance strong AI governance with empowerment. “We are developing governance rules, setting them up, and training people,” she says. “In the meantime, we are trying to get something easy to adapt to, so people can feel and see the results.” To strike the balance, she pairs democratised access to data and AI with centralised governance: “Instead of me doing everything directly, I’m trying to empower everyone around and help them realise they have the individual ability to get things done. Thanks to an empowered team, I can be freed up to focus on security and ensure data governance to avoid any type of unfortunate data-related issue.”

The importance of unified governance to manage the risks of generative AI was a common theme in our interviews. Schaefer says, at the VA, “We are seeing the need to have very integrated governance models, integrated governance structures for all data and all models. We’re putting a strong increased focus on very centralized tools and processes that allow us to have an enterprise data structure.” While unified governance has always been a need, generative AI has raised the stakes. “The risk of having non-standardized non-well-defined data running through a model, and how that could lead to bias and to model drift, has made that a much more important aspect,” he says.

Stoddard notes the importance of including a wide range of voices throughout the AI oversight process at Adobe: “It’s important to have the element of diverse oversight through the whole process and to make sure that we have diversity not only of things like ethnicity, gender, and sexual orientation but also diversity of thought and professional experience mixed into the process and the AI impact assessment.” And organisation-wide visibility matters, too. Schaefer adds: “High on our list is getting governance tools in place that provide a visual overview of models that are in development so that they can be spoken to by leadership or reviewed by stakeholders at any time.”

Constitutional AI, an approach currently advocated by the startup Anthropic, provides LLMs with specific values and principles to adhere to rather than relying on human feedback to guide content production. A constitutional approach guides a model to enact the norms outlined in the constitution by, for example, avoiding any outputs that are toxic or discriminatory.

And though the risks they bring to the enterprise are substantial, on the flip side, AI technologies also offer great power in reducing some business risks. Zaharia notes, “Analyzing the results of models or analyzing feedback from people’s comments does become easier with language models. So it’s a bit easier to detect if your system is doing something wrong and we’re seeing a little bit of that.” MIT’s 2022 survey found that security and risk management (31%) was the top tangible benefit of AI that executives had noted to date, while fraud detection (27%), cyber security (27%), and risk management (26%) were the top three positive impacts anticipated by 2025.

A powerful new technology like generative AI brings with it numerous risks and responsibilities. Our interviews suggest that a motivated AI community of practitioners, startups and companies will increasingly attend to the governance risks of AI, just as they do with environmental sustainability, through a mixture of public interest concern, good governance, and brand protection.

Conclusion

AI has been through many springs and winters over the last half-century. Until recently, it had made only limited inroads into the enterprise, beyond pilot projects or more advanced functions like IT and finance. That is set to change in the generative AI era, which is sparking the transition to truly enterprise-wide AI.

Few practitioners dismiss generative AI as hype. If anything, its critics are more impressed by its power than anyone. But executives and experts believe that organisations can reap the fruits of the new harvest and also attend to its risks. Commercial organisations and governments alike have to tread a fine line between embracing AI to accelerate innovation and productivity and creating guardrails to mitigate risk and anticipate the inevitable accidents and mishaps ahead.

With these precautions in mind, the most forward-looking CIOs are moving decisively into this AI era. “People who went through the computer and Internet revolution talk about when computers first came online,” says Blyton. “If you were one of those people who learned how to work with computers, you had a very good career. This is a similar turning point: as long as you embrace the technology, you will benefit from it.”

Endnotes

1. “The economic potential of generative AI,” McKinsey & Company, June 14, 2023, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#/.

2. “Generative AI could raise global GDP by 7%,” Goldman Sachs, April 5, 2023, https://www.goldmansachs.com/intelligence/pages/generative-ai-could-raise-global-gdp-by-7-percent.html.

3. Jim Euchner, “Generative AI,” Research-Technology Management, April 20, 2023, https://doi.org/10.1080/08956308.2023.2188861.

4. “CIO vision 2025: Bridging the gap between BI and AI,” MIT Technology Review Insights, September 2022, https://www.technologyreview.com/2022/09/20/1059630/cio-vision-2025-bridging-the-gap-between-bi-and-ai/.

5. “Retail in the Age of Generative AI,” Databricks, April 13, 2023, https://www.databricks.com/blog/2023/04/13/retail-age-generative-ai.html.

6. “The Great Unlock: Large Language Models in Manufacturing,” Databricks, May 30, 2023, https://www.databricks.com/blog/great-unlock-large-language-models-manufacturing.

7. “Generative AI Is Everything Everywhere, All at Once,” Databricks, June 7, 2023, https://www.databricks.com/blog/generative-ai-everything-everywhere-all-once.

8. “Large Language Models in Media & Entertainment,” Databricks, June 6, 2023, https://www.databricks.com/blog/large-language-models-media-entertainment.

9. Ibid.

10. Ibid.

11. Ibid.

12. Ibid.

13. Jonathan Vanian and Kif Leswing, “ChatGPT and generative AI are booming, but the costs can be extraordinary,” CNBC, March 13, 2023, https://www.cnbc.com/2023/03/13/chatgpt-and-generative-ai-are-booming-but-at-a-very-expensive-price.html.

14. Josh Saul and Dina Bass, “Artificial Intelligence Is Booming—So Is Its Carbon Footprint,” Bloomberg, March 9, 2023, https://www.bloomberg.com/news/articles/2023-03-09/how-much-energy-do-ai-and-chatgpt-use-no-one-knows-for-sure#xj4y7vzkg.

15. Chris Stokel-Walker, “The Generative AI Race Has a Dirty Secret,” Wired, February 10, 2023, https://www.wired.co.uk/article/the-generative-ai-search-race-has-a-dirty-secret.

16. Vanian and Leswing, “ChatGPT and generative AI are booming,” https://www.cnbc.com/2023/03/13/chatgpt-and-generative-ai-are-booming-but-at-a-very-expensive-price.html.

17. Arthur Spirling, “Why open-source generative AI models are an ethical way forward for science,” Nature, April 18, 2023, https://www.nature.com/articles/d41586-023-01295-4.

18. Sharon Goldman, “With a wave of new LLMs, open-source AI is having a moment—and a red-hot debate,” VentureBeat, April 10, 2023, https://venturebeat.com/ai/with-a-wave-of-new-llms-open-source-ai-is-having-a-moment-and-a-red-hot-debate/.

19. Thomas H. Davenport and Nitin Mittal, “How Generative AI Is Changing Creative Work,” Harvard Business Review, November 14, 2022, https://hbr.org/2022/11/how-generative-ai-is-changing-creative-work.

20. “Hello Dolly: Democratizing the magic of ChatGPT with open models,” Databricks, March 24, 2023, https://www.databricks.com/blog/2023/03/24/hello-dolly-democratizing-magic-chatgpt-open-models.html.

21. “Introducing the World’s First Truly Open Instruction-Tuned LLM,” Databricks, April 12, 2023, https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm.

22. “Just how good can China get at generative AI?” The Economist, May 9, 2023, https://www.economist.com/business/2023/05/09/just-how-good-can-china-get-at-generative-ai.

23. “Gen AI LLM—A new era of generative AI for everyone,” Accenture, April 17, 2023, https://www.accenture.com/content/dam/accenture/final/accenture-com/document/Accenture-A-New-Era-of-Generative-AI-for-Everyone.pdf.

24. “The economic potential of generative AI,” McKinsey & Company, June 14, 2023, https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#/.

25. “Generative AI could raise global GDP by 7%,” Goldman Sachs, April 5, 2023, https://www.goldmansachs.com/intelligence/pages/generative-ai-could-raise-global-gdp-by-7-percent.html.

26. “Claude’s Constitution,” Anthropic, May 9, 2023, https://www.anthropic.com/index/claudes-constitution.
