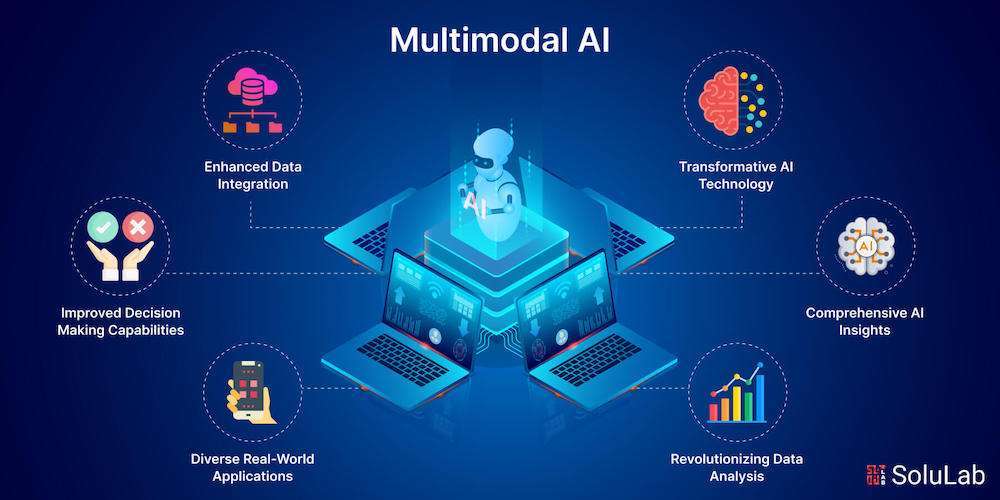

In the fast-changing world of AI, the development of multimodal models represents a major advance. These models, able to analyse and comprehend information in different formats including text, images, and sound, have great potential to transform many sectors. As they mature, understanding how they work is becoming increasingly important.

They can improve natural language processing and make interactions between humans and computers more intuitive, providing a comprehensive approach to AI that goes beyond older single-modal systems. In this piece, we explore the basics of multimodal models, looking at their main components, advantages, uses, and the obstacles they face.

The continuing development of AI hyper-personalisation, which selects email and web content products for the individual, enables additional sources of information to be integrated for an ever greater understanding of each consumer’s unique, personal and immediate needs, wants and desires.

What is an AI multimodal model application?

Multimodal models in artificial intelligence are systems that can operate across several “modalities.” In machine learning and artificial intelligence research, a modality is a specific type of data: text, images, video, sound, computer code, mathematical formulas, and so on.

Currently, the majority of AI systems can handle only a single modality, or convert data from one modality into another. For instance, text-only large language models such as GPT-3.5 accept a piece of text, process it internally in ways that are not visible to the user, and return a textual response. AI image recognition and text-to-image models each work with two modalities: text and images.

AI image recognition systems accept an image and return a textual description, whereas text-to-image models take a textual prompt and produce a matching image. When a language model appears to handle several modalities, it is usually relying on another AI system to convert the non-textual input into text, or the text into another format. For instance, ChatGPT uses GPT-3.5 and GPT-4 for its text capabilities, yet it depends on Whisper for interpreting audio inputs and DALL-E 3 for creating images.

Examples of Multimodal AI Models

Cross-Domain Knowledge Transfer: A significant benefit of using multimodal models is their capacity to apply learned knowledge across various data sets. Consider the domain of business data analysis. A deep learning model that has been trained on the data of one company can be repurposed for another by employing a technique called transfer learning.

This approach not only cuts development costs but also benefits different departments or groups within an organisation by drawing on shared knowledge and skills.

Analysing Visual Content: Multimodal deep learning is crucial for activities like analysing images, answering visual questions, and creating captions.

These AI systems are trained on extensive datasets of annotated images, allowing them to understand and interpret visual information with high precision. With enough training, they become skilled at analysing and categorising images that were not part of the training set, making them essential in areas such as healthcare (analysing medical images), ecommerce (identifying products), and self-driving cars (detecting objects).

AI Content Generators: A further striking illustration of multimodal models in practice is found in AI content generators that create content according to given instructions. Historically, these models were only trained in certain areas, which restricted their use. Yet, recent progress in multimodal models has resulted in the creation of adaptable systems that can be used in different areas. These systems can effortlessly combine text and images to create detailed multimedia content, serving a wide range of purposes including content production, advertising, and narrative development.

The most widespread everyday examples of this kind of AI are voice assistants such as Siri and Alexa. An obvious commercial application is for what the assistant hears to inform product suggestions when you are next online, pondering an imminent purchase.

Key Components of Multimodal AI Models

Feature Extractors: At the core of multimodal models are specialised feature extractors designed for each type of data. For textual data, these might be word embeddings or contextual embeddings derived from pre-trained language models such as BERT or GPT. For images, convolutional neural networks (CNNs) are frequently used to extract visual features, and for audio, techniques such as spectrogram analysis or mel-frequency cepstral coefficients (MFCCs) are used. These feature extractors convert raw data into meaningful representations that capture the core characteristics of each type of data.
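To make the idea of per-modality feature extraction concrete, here is a minimal sketch in Python. The three functions are deliberately simple, hypothetical stand-ins invented for illustration (hashed word counts instead of learned embeddings, a grey-level histogram instead of a CNN, frame energy instead of MFCCs); a real system would use trained models. What the sketch shows is only the shape of the step: each modality goes in as raw data and comes out as a list of numbers.

```python
import math
import zlib


def text_features(text, dim=8):
    """Toy stand-in for word embeddings: hashed bag-of-words counts."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 gives a hash that is stable between runs, unlike built-in hash()
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


def image_features(pixels, bins=4):
    """Toy stand-in for a CNN: normalised histogram of grey levels (0-255)."""
    flat = [int(p) for row in pixels for p in row]
    hist = [0.0] * bins
    for p in flat:
        hist[min(p * bins // 256, bins - 1)] += 1.0
    return [h / len(flat) for h in hist]


def audio_features(samples, frame=4):
    """Toy stand-in for MFCCs: root-mean-square energy of each frame."""
    feats = []
    for start in range(0, len(samples) - frame + 1, frame):
        chunk = samples[start:start + frame]
        feats.append(math.sqrt(sum(s * s for s in chunk) / frame))
    return feats


# Each modality becomes a plain numeric vector that later stages can combine.
text_vec = text_features("a red car on the road")
image_vec = image_features([[0, 255], [64, 128]])
audio_vec = audio_features([1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0, 0.0])
```

The vectors have different lengths and scales, which is exactly why a separate fusion step is needed afterwards.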

Fusion Mechanisms: After extracting features from each modality, the next hurdle is to merge them effectively. The methods for merging differ based on the structure of the multimodal model, yet they typically aim to integrate data from the various modalities in a complementary way. This can be accomplished using methods like concatenation, element-wise addition, or attention mechanisms, in which the model is trained to adjust the weight given to features from each modality according to the task’s context.
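The three fusion methods named above can be sketched in a few lines of Python. This is an illustrative sketch, not a production design: the function names are our own, and in `attention_fusion` the relevance scores are passed in by hand, whereas a trained model would learn to produce them from the input.

```python
import math


def concat_fusion(a, b):
    """Concatenation: place the two feature vectors end to end."""
    return list(a) + list(b)


def additive_fusion(a, b):
    """Element-wise addition: requires vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("additive fusion needs vectors of equal length")
    return [x + y for x, y in zip(a, b)]


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fusion(features, scores):
    """Attention-style fusion: weighted sum of equal-length modality vectors.

    `scores` holds one relevance score per modality (hand-set here,
    learned in a real model).
    """
    weights = softmax(scores)
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]


text_vec = [1.0, 0.0]
image_vec = [0.0, 1.0]

fused_concat = concat_fusion(text_vec, image_vec)      # length 4
fused_sum = additive_fusion(text_vec, image_vec)       # length 2
fused_attn = attention_fusion([text_vec, image_vec], [2.0, 0.0])  # favours text
```

Concatenation keeps every feature but grows the vector; addition and attention keep the size fixed, with attention letting the balance between modalities shift from one input to the next.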

Cross-Modal Attention: A key feature of sophisticated multimodal models is their capacity to focus on important details from different types of data. Attention mechanisms that span across different data types allow the model to concentrate on certain aspects of one type of data based on signals from another type. For instance, in a task that combines text and images for answering questions, the model might concentrate on particular words in the question while examining specific areas of the image to provide a correct response.
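As a rough illustration of the question-answering example, the sketch below applies scaled dot-product attention with a query taken from the text side and keys and values taken from image regions. The vectors are made-up numbers chosen for readability, and the function name is our own; in a real model the queries, keys and values come from learned projections.

```python
import math


def cross_modal_attention(text_query, region_keys, region_values):
    """Attend over image regions using a query from the text modality.

    Returns the attention weights (one per region) and the weighted
    sum of the region values.
    """
    scale = math.sqrt(len(text_query))
    scores = [
        sum(q * k for q, k in zip(text_query, key)) / scale
        for key in region_keys
    ]
    # Softmax over regions, shifted by the maximum for numerical stability
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    dim = len(region_values[0])
    attended = [
        sum(w * value[i] for w, value in zip(weights, region_values))
        for i in range(dim)
    ]
    return weights, attended


# Hypothetical vectors: the query stands for a word in the question,
# each key/value pair stands for one region of the image.
query = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 4.0]]
values = [[1.0, 0.0], [0.0, 1.0]]

weights, attended = cross_modal_attention(query, keys, values)
# The first region matches the query, so it receives most of the weight.
```

The same function works in the other direction (an image region querying the words of the question) by swapping which modality supplies the query.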

Training Paradigms: During training, sophisticated multimodal models learn to focus on the important details across different types of data. Their attention layers are trained to concentrate on certain elements in one type of data based on signals from another type. For instance, in a task that combines text and images for question answering, the model learns to focus on particular words in the question while examining the areas of the image that are relevant to those words, so that it can provide a correct response.

Benefits of a Multimodal Model

Multimodal models signify a breakthrough in artificial intelligence, providing numerous advantages that go beyond the conventional single-modal methods.

Contextual Comprehension: A key advantage of multimodal models is their capacity to comprehend context, an essential component of tasks such as natural language processing (NLP) and producing suitable replies. By analysing visual and textual information at the same time, these models gain a deeper insight into the underlying context, allowing for more detailed and contextually fitting interactions.

Natural Interaction: Multimodal models transform the way people interact with computers by making communication more natural and intuitive. Unlike old-school AI systems that could only handle one type of input, multimodal models can easily combine various forms of input, such as spoken words, written text, and visual signals. This comprehensive strategy boosts the system’s capacity to grasp what the user wants and reply in a way that resembles a human-like dialogue, thus enhancing the user’s experience and involvement.

Accuracy Enhancement: By using a variety of data types such as written text, spoken words, pictures, and videos, multimodal models can significantly improve their accuracy across different tasks. Combining several modalities gives these models a more thorough grasp of the data, resulting in more accurate predictions and better results. These models are also particularly good at dealing with data that is missing or contains errors, using information from the other modalities to fill the gaps and correct mistakes.
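One simple way to picture how a system can cope with a missing modality is late fusion: each modality produces its own prediction, and the system averages whichever predictions are available. The sketch below assumes this approach purely for illustration; the text above does not say which technique a given model uses, and the function name and probabilities are invented.

```python
def late_fusion(predictions):
    """Average per-class probabilities over the modalities that are present.

    `predictions` maps a modality name to a list of class probabilities,
    or to None when that modality is missing for this input.
    """
    available = [p for p in predictions.values() if p is not None]
    if not available:
        raise ValueError("at least one modality is required")
    n_classes = len(available[0])
    return [
        sum(p[i] for p in available) / len(available)
        for i in range(n_classes)
    ]


# The audio track is missing, so only text and image contribute.
fused = late_fusion({
    "text": [0.8, 0.2],
    "image": [0.6, 0.4],
    "audio": None,
})
```

Because the average is taken only over the modalities that are present, the system still returns a prediction when one input fails, at the cost of having less evidence behind it.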

Diverse data sources improve the abilities of AI systems by giving them a more comprehensive grasp of the world and its surroundings. This wider comprehension allows AI systems to tackle a broader range of tasks with higher precision and effectiveness. From examining intricate data sets and identifying trends in multimedia materials to producing detailed multimedia results, multimodal models open up new opportunities for AI in different fields.

Multimodal AI Applications

By drawing on several data modalities at once, multimodal AI supports a wide range of applications across sectors:

Visual Question Answering (VQA) & Image Captioning: Multimodal models merge visual understanding with natural language processing to answer questions related to images and generate descriptive captions, benefiting interactive systems, educational platforms, and content recommendations.

Language Translation with Visual Context: By integrating visual information, translation models deliver more context-aware translations, particularly beneficial in domains where visual context is crucial.

Gesture Recognition & Emotion Recognition: These models interpret human gestures and emotions, facilitating inclusive communication and fostering empathy-driven interactions in various applications.

Video Summarisation: Multimodal models streamline content consumption by summarising videos through key visual and audio element extraction, enhancing content browsing and management platforms.

DALL-E Text-to-Image Generation: DALL-E generates images from textual descriptions, expanding creative possibilities in art, design, and advertising.

Medical Diagnosis: Multimodal models aid medical image analysis by combining data from various sources, assisting healthcare professionals in accurate diagnoses and treatment planning.

Autonomous Vehicles: These models process data from sensors, cameras, and GPS to navigate and make real-time driving decisions in autonomous vehicles, contributing to safe and reliable self-driving technology.

Educational Tools & Virtual Assistants: Multimodal models enhance learning experiences and power virtual assistants by providing interactive educational content, understanding voice commands, and processing visual data for comprehensive user interactions.

To the best of our knowledge it isn’t here yet, but a time will soon come when consumers can give an ecommerce store permission to capture data via their smartphone or another domestic source. This would enrich the data held about each consumer and sharpen the focus on that individual’s imminent purchase requirements, possibly before the consumer has recognised them.

Challenges in Multimodal Learning

Heterogeneity: Integrating diverse data formats and distributions from different modalities requires careful handling to ensure compatibility.

Feature Fusion: Effectively combining features from various modalities poses a challenge, requiring optimal fusion mechanisms.

Semantic Alignment: Achieving meaningful alignment between modalities is essential for capturing accurate correlations.

Data Scarcity: Obtaining labelled data for multimodal tasks, especially in specialised domains, can be challenging and costly.

Computational Complexity: Multimodal models often involve complex architectures and large-scale computations, demanding efficient resource management.

Evaluation Metrics: Designing appropriate evaluation metrics tailored to multimodal tasks is crucial for assessing performance accurately.

Interpretability: Enhancing model interpretability is essential for understanding decision-making processes and addressing bias.

Domain Adaptation: Ensuring robust performance across diverse data distributions and environments requires effective domain adaptation techniques.
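To give the semantic alignment challenge a concrete shape, the sketch below scores how well a text vector matches several image vectors using cosine similarity. It assumes, as a simplification, that both modalities have already been mapped into the same vector space; learning that shared space is the hard part and is not shown. The function names, labels and numbers are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def best_match(text_vec, image_vecs):
    """Return the name of the image whose vector is closest to the text."""
    scores = {
        name: cosine_similarity(text_vec, vec)
        for name, vec in image_vecs.items()
    }
    return max(scores, key=scores.get), scores


# Made-up embeddings: a caption and two candidate images.
caption = [1.0, 0.0]
images = {"cat_photo": [0.9, 0.1], "car_photo": [0.0, 1.0]}

name, scores = best_match(caption, images)
```

When the modalities are well aligned, a caption scores highest against the image it describes; poor alignment shows up as scores that barely differ between the right and wrong image.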

Conclusion

These insights into multimodal models suggest that they are more than just a technological breakthrough: they signify a fundamental change in the field of artificial intelligence. By combining information from different types of data, these models not only improve what AI systems can do but also open up new opportunities in various fields.

Nonetheless, as we welcome the potential of these models, we must also recognise the obstacles they present. Addressing these challenges will demand teamwork from researchers, engineers, and stakeholders from different sectors. Within these obstacles, however, are many chances to innovate, create, and change how we engage with technology and our environment. Looking ahead, let’s seize the potential of multimodal AI and keep striving to expand the limits of what’s achievable.