Machine learning models can overfit or underfit their training data. Regularisation constrains a model so that it generalises to new, unseen data instead of memorising the training set. It reduces the risk of overfitting and helps the model perform well beyond the data it was trained on.

What Is Regularisation in Machine Learning?

Regularisation is a technique used in machine learning to prevent a model from overfitting. Overfitting occurs when the model learns not only the genuine pattern in the training data but also its noise, which can lead to poor performance on new, unseen data. Regularisation counters this by adding a penalty term to the loss function used in model training. The main points about regularisation are:

1. Goal of regularisation: The main objective is to keep the model simple enough to generalise, thereby improving its performance on unseen data.

2. Methods: Several regularisation techniques are commonly used:

i. L1 regularisation (Lasso): This adds a penalty proportional to the sum of the absolute values of the coefficients. It can drive some coefficients to exactly zero, meaning that the model ignores the corresponding features. This is useful for feature selection.

ii. L2 regularisation (Ridge): The penalty is proportional to the sum of the squared coefficients. All coefficients are shrunk towards zero, but typically none are eliminated.

iii. Elastic Net: This combines L1 and L2 regularisation. The model is controlled by adding both penalties to the loss, which gives a compromise between the sparsity of L1 and the shrinkage of L2.

3. Impact on Loss Function: Regularisation modifies the loss function by adding a regularisation term, scaled by a parameter λ (lambda).

4. Selecting the right regularisation parameter: The parameter λ sets the strength of the penalty and is very important. It is usually selected through cross-validation so that the model fits the training data well while remaining simple enough to work well with new data.
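The three penalties can be written as small functions. This is a minimal NumPy sketch; the function names and the `alpha` mixing weight for Elastic Net are illustrative assumptions (libraries such as scikit-learn parameterise Elastic Net differently, for example with an `l1_ratio` and extra scaling factors).

```python
import numpy as np

def l1_penalty(w):
    """L1 (Lasso) penalty: sum of the absolute coefficient values."""
    return float(np.sum(np.abs(w)))

def l2_penalty(w):
    """L2 (Ridge) penalty: sum of the squared coefficient values."""
    return float(np.sum(w ** 2))

def elastic_net_penalty(w, alpha=0.5):
    """Elastic Net penalty: a weighted mix of the L1 and L2 penalties.

    alpha is an assumed mixing weight: 1.0 gives pure L1, 0.0 gives pure L2.
    """
    return alpha * l1_penalty(w) + (1 - alpha) * l2_penalty(w)

def regularised_loss(original_loss, w, lam, penalty=l2_penalty):
    """Regularised loss = original loss + lambda * penalty."""
    return original_loss + lam * penalty(w)

w = np.array([3.0, -4.0, 0.0])
print(l1_penalty(w))                       # 7.0
print(l2_penalty(w))                       # 25.0
print(elastic_net_penalty(w))              # 16.0
print(regularised_loss(2.0, w, lam=0.1))   # 4.5
```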

How Does Regularisation Work?

1. Modifying the Loss Function

In regularisation, the loss function is modified. The regularised loss combines the original loss, which measures how well the model fits the training data, with a penalty term that discourages large parameter values. The regularised loss function looks like this:

Regularised Loss = Original Loss + λ × Penalty

Here, λ (lambda) is the regularisation strength, which controls the trade-off between fitting the data well and keeping the model parameters small.
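As a worked instance of the formula above, assume linear regression with a design matrix X, targets y and weights w, a squared-error original loss and the L2 penalty (Ridge). Setting the gradient to zero gives a closed-form solution:

```latex
\begin{aligned}
L(\mathbf{w}) &= \lVert \mathbf{y} - X\mathbf{w} \rVert_2^2 + \lambda \lVert \mathbf{w} \rVert_2^2 \\
\nabla_{\mathbf{w}} L &= -2X^\top(\mathbf{y} - X\mathbf{w}) + 2\lambda \mathbf{w} = \mathbf{0} \\
\Longrightarrow\quad (X^\top X + \lambda I)\,\mathbf{w}^\ast &= X^\top \mathbf{y} \\
\mathbf{w}^\ast &= (X^\top X + \lambda I)^{-1} X^\top \mathbf{y}
\end{aligned}
```

Because XᵀX is positive semi-definite, adding λI with λ > 0 makes the matrix invertible even when XᵀX itself is singular.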

2. Types of Penalties

i. L1 regularisation (Lasso): The penalty is the sum of the absolute values of the parameters. This can result in a sparse model in which some parameters are exactly zero, meaning that the model effectively drops those features.

ii. L2 regularisation (Ridge): The penalty is the sum of the squared parameters. It applies to every parameter, shrinking all of them towards zero without making them exactly zero.

iii. Elastic Net: An Elastic Net combines an L1 penalty and an L2 penalty. This is useful when features are correlated or when you want to combine the feature-selection property of L1 with the shrinkage property of L2.
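To see the difference between the two penalties in practice, the sketch below fits Lasso and Ridge to the same synthetic data, in which only two of ten features matter. It assumes NumPy and the objectives ‖y − Xw‖² + λ‖w‖₁ and ‖y − Xw‖² + λ‖w‖₂². Lasso is solved here with ISTA (proximal gradient descent), which is one of several possible solvers; the helper names are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Proximal operator of tau * ||.||_1: shrinks each entry towards zero by tau."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def lasso_ista(X, y, lam, n_iter=1000):
    """Minimise ||y - Xw||^2 + lam * ||w||_1 with ISTA (proximal gradient descent)."""
    step = 1.0 / (2 * np.linalg.norm(X, ord=2) ** 2)  # 1 / Lipschitz constant of the gradient
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = 2 * X.T @ (X @ w - y)                     # gradient of the squared-error term
        w = soft_threshold(w - step * grad, step * lam)  # gradient step, then L1 shrinkage
    return w

def ridge_closed_form(X, y, lam):
    """Minimise ||y - Xw||^2 + lam * ||w||_2^2 by solving (X^T X + lam I) w = X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))
true_w = np.array([3.0, -2.0] + [0.0] * 8)   # only the first two features matter
y = X @ true_w + 0.5 * rng.normal(size=100)

w_lasso = lasso_ista(X, y, lam=50.0)
w_ridge = ridge_closed_form(X, y, lam=50.0)
print("Lasso:", np.round(w_lasso, 3))  # irrelevant features are typically exactly 0
print("Ridge:", np.round(w_ridge, 3))  # coefficients are shrunk but non-zero
```

The soft-thresholding step is what produces exact zeros: any coordinate whose update falls within `step * lam` of zero is set to zero, whereas the Ridge solution only scales coefficients down.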

3. Effect on Training

During training, the regularisation term influences the updates made to the model parameters:

- A larger λ gives the penalty term more weight, pushing the parameters towards smaller values. This leads to simpler models that might generalise better but could underfit the training data.

- A smaller λ gives the penalty term less weight, allowing the model to fit the training data more closely, possibly at the expense of increased complexity and overfitting.
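The effect of λ on the parameter updates can be seen in plain gradient descent. In this minimal sketch (NumPy, a mean-squared-error loss plus λ‖w‖², and an illustrative learning rate and epoch count), the penalty contributes 2λw to the gradient, so every update also pulls the weights towards zero; larger λ values end with a smaller weight norm.

```python
import numpy as np

def train_l2_gd(X, y, lam, lr=0.05, n_epochs=2000):
    """Gradient descent on MSE + lam * ||w||^2.

    The penalty adds 2 * lam * w to the gradient, so each step also shrinks
    the weights towards zero (often called "weight decay").
    """
    n, p = X.shape
    w = np.zeros(p)
    for _ in range(n_epochs):
        grad_loss = (2.0 / n) * X.T @ (X @ w - y)  # gradient of the MSE term
        grad_penalty = 2.0 * lam * w               # gradient of lam * ||w||^2
        w -= lr * (grad_loss + grad_penalty)
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(50, 5))
y = X @ np.array([2.0, -1.0, 0.5, 0.0, 3.0]) + 0.1 * rng.normal(size=50)

for lam in [0.0, 0.1, 1.0]:
    w = train_l2_gd(X, y, lam)
    print(f"lambda={lam}: ||w|| = {np.linalg.norm(w):.3f}")
```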

4. Balancing Overfitting and Underfitting

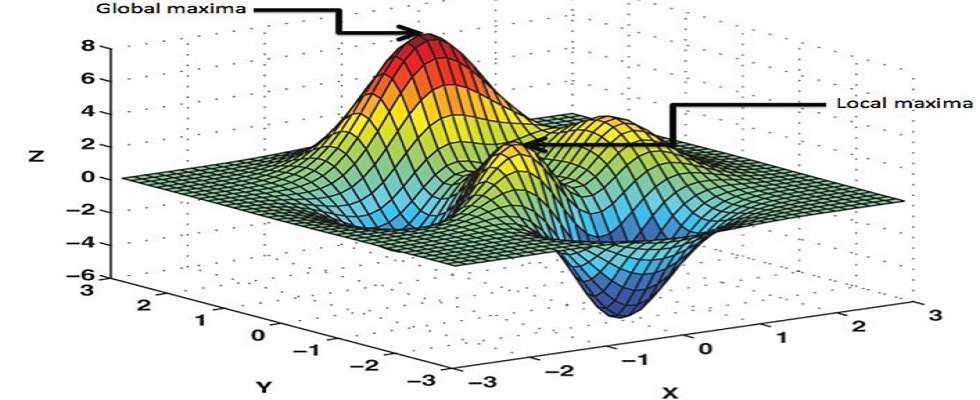

Choosing the right value of λ is crucial:

- Too high a value can make the model too simple and fail to capture important patterns in the data (underfitting).

- Too low a value might not sufficiently penalise large coefficients, leading to a model that captures too much noise from the training data (overfitting).

5. Practical Implementation

In practice, the best λ value and regularisation type (such as L1, L2 or Elastic Net) are often chosen by cross-validation. This involves training multiple models with different λ values, and possibly different regularisation types, and evaluating each on held-out data. The model with the best validation or cross-validation score is then selected.
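A minimal sketch of choosing λ by k-fold cross-validation, assuming Ridge regression solved in closed form and synthetic data. The helper names, the candidate grid and the fold count are illustrative choices, not a prescribed recipe; libraries such as scikit-learn offer ready-made tools for this (for example `RidgeCV` and `GridSearchCV`).

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form Ridge: solve (X^T X + lam I) w = X^T y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def cv_error(X, y, lam, k=5, seed=0):
    """Mean validation MSE of Ridge over k folds (same split for every lambda)."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    errors = []
    for i in range(k):
        val = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        w = ridge_fit(X[train], y[train], lam)
        errors.append(np.mean((X[val] @ w - y[val]) ** 2))
    return float(np.mean(errors))

def select_lambda(X, y, candidates, k=5):
    """Return the candidate with the lowest cross-validation error, plus all scores."""
    scores = {lam: cv_error(X, y, lam, k) for lam in candidates}
    best = min(scores, key=scores.get)
    return best, scores

rng = np.random.default_rng(2)
X = rng.normal(size=(60, 20))
w_true = np.zeros(20)
w_true[:3] = [1.5, -2.0, 1.0]
y = X @ w_true + rng.normal(size=60)

best, scores = select_lambda(X, y, [0.01, 0.1, 1.0, 10.0, 100.0])
for lam, err in scores.items():
    print(f"lambda={lam:>6}: CV MSE = {err:.3f}")
print("selected lambda:", best)
```

Using the same fold split for every candidate keeps the comparison fair, since differences in score then come from λ rather than from how the data happened to be split.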

Roles of Regularisation

In machine learning, regularisation plays a key role in model development. Its primary goals are to manage model complexity, improve generalisation to new data, and address specific problems such as multicollinearity and feature selection. Here are the main roles that regularisation plays:

- Preventing Overfitting: Regularisation's most significant role is to prevent overfitting, a common issue in which a model learns the noise in the training data as well as the underlying pattern. This usually results in high performance on the training set but poor performance on unseen data. Regularisation reduces overfitting by penalising large weights, encouraging the model to prefer simpler hypotheses.

- Improving Model Generalisation: By constraining the model's complexity, regularisation helps it perform well on both the training data and new, unseen data. A well-regularised model is more likely to capture the data's underlying trends than the training set's specific details and noise.

- Handling Multicollinearity: Regularisation is particularly useful when features are highly correlated (multicollinearity). L2 regularisation (Ridge) reduces the variance of the coefficient estimates, which multicollinearity otherwise inflates. This stabilisation makes the model's predictions more reliable.

- Feature Selection: L1 regularisation (Lasso) encourages sparsity in the model coefficients. By penalising the absolute value of the coefficients, Lasso can shrink some of them to exactly zero, effectively selecting a smaller subset of the available features. This can be extremely useful in scenarios with high-dimensional data where feature selection is necessary to improve model interpretability and efficiency.

- Improving Robustness to Noise: Regularisation makes the model less sensitive to the idiosyncrasies of the training data, including noise and outliers, because the penalty discourages fitting them too closely. As a result, the model relies more on patterns that hold across the data as a whole.

- Trading Bias for Variance: Regularisation introduces bias into the model (assuming that smaller weights are preferable). However, it reduces variance by preventing the model from fitting too closely to the training data. This trade-off is beneficial when the unconstrained model is highly complex and prone to overfitting.

- Enabling the Use of More Complex Models: Regularisation sometimes allows practitioners to use more complex models than they otherwise could. For example, dropout can be used to train deep neural networks with less overfitting, as it helps prevent neurons from co-adapting.

- Aiding in Convergence: For models trained using iterative optimisation techniques (like gradient descent), regularisation can help ensure smoother and more reliable convergence. This is especially true for problems that are ill-posed or poorly conditioned without regularisation.
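The multicollinearity point can be illustrated with two almost identical features. This sketch assumes synthetic data and the closed-form Ridge solution; with λ = 0 it reduces to ordinary least squares, whose two coefficients can swing far from their true values of (1, 1) because the data barely distinguishes the features, while the L2 penalty pulls them towards a more even split.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form Ridge: solve (X^T X + lam I) w = X^T y (lam = 0 gives OLS)."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(3)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + 0.01 * rng.normal(size=n)      # x2 is almost a copy of x1
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.1 * rng.normal(size=n)   # true coefficients are (1, 1)

w_ols = ridge_fit(X, y, lam=0.0)     # ordinary least squares, no penalty
w_ridge = ridge_fit(X, y, lam=1.0)   # L2-regularised
print("OLS:  ", np.round(w_ols, 3))
print("Ridge:", np.round(w_ridge, 3))
```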

Techniques of Regularisation (Effects)

Regularisation is a critical technique in machine learning to reduce overfitting, enhance model generalisation, and manage model complexity. Several regularisation techniques are used across different types of models. Here are some of the most common and effective regularisation techniques:

- L1 Regularisation (Lasso): Encourages sparsity in the model parameters. Some coefficients can shrink to zero, effectively performing feature selection.

- L2 Regularisation (Ridge): Shrinks all coefficients towards zero but does not usually bring any of them to exactly zero. It helps with multicollinearity and model stability.

- Elastic Net: Combines the L1 and L2 penalties. It is useful when features are correlated or when you want to balance feature selection with coefficient shrinkage.

- Dropout: Randomly sets a fraction of a neural network's activations to zero at each training step. The network must then learn features that do not rely on any small set of neurons, which makes it more robust and less likely to overfit.

- Early Stopping: Halts training once performance on a validation set stops improving, which prevents overfitting caused by training for too long. It is a straightforward and often very effective form of regularisation.

- Batch Normalisation: Normalises layer inputs over each mini-batch. Its mild regularising effect can reduce the need for other forms of regularisation and sometimes eliminate the need for dropout.

- Weight Constraint: Caps the size of the weights (for example, a maximum norm per weight vector), which can help prevent overfitting and improve the model's generalisation.

- Data Augmentation: Although not a direct form of regularisation in a mathematical sense, it acts like one by artificially increasing the size of the training set, which helps the model generalise better.
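Minimal NumPy sketches of two of the techniques above: inverted dropout applied to a layer's activations, and a patience-based early-stopping rule applied to a list of validation losses. The function names, the patience value and the example losses are hypothetical; deep learning frameworks provide their own dropout layers and early-stopping callbacks.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units during training and
    rescale the survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return activations
    keep = rng.random(activations.shape) >= rate  # True for units that are kept
    return activations * keep / (1.0 - rate)

def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch with the best validation loss, stopping once the loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

rng = np.random.default_rng(4)
h = np.ones((1000, 100))
h_drop = dropout(h, rate=0.5, rng=rng)
print("fraction zeroed:", np.mean(h_drop == 0))  # close to 0.5
print("mean activation:", h_drop.mean())         # close to 1.0

val_losses = [1.0, 0.8, 0.65, 0.6, 0.62, 0.64, 0.7, 0.75]
print("best epoch:", early_stopping_epoch(val_losses))  # 3
```

Rescaling the kept units by 1 / (1 − rate) during training means no change is needed at inference time, when dropout is simply switched off.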

What Are Overfitting and Underfitting?

Overfitting

Overfitting happens when a model gets too caught up in the nuances and random fluctuations of the training data to the point where its ability to perform well on new, unseen data suffers. Essentially, the model becomes overly intricate, grasping at patterns that don’t hold up when applied to different datasets.

Characteristics:

- High accuracy on training data but poor accuracy on validation or test data.

- The model has learned the random fluctuations in the training data as well as its underlying structure.

- Often occurs when the model is too complex relative to the amount and noisiness of the input data.

Common Causes:

- Too many parameters in the model (high complexity).

- Too little training data.

- Insufficient use of regularisation.

- Training for too many epochs or without early stopping.

Mitigation Strategies:

- Simplify the model by reducing the number of parameters or using a less complex model.

- Increase training data.

- Use regularisation techniques like L1, L2, and dropout.

- Employ techniques like cross-validation to ensure the model performs well on unseen data.

- Implement early stopping during training.
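A small illustration of overfitting, assuming synthetic data from a sine curve with Gaussian noise and NumPy's `Polynomial.fit`. As the polynomial degree grows, the training error can only fall, and the degree-11 fit passes through all 12 training points; its error on the noise-free test curve is nevertheless typically much larger than its near-zero training error.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(5)
x_train = np.sort(rng.uniform(-1, 1, 12))
y_train = np.sin(np.pi * x_train) + 0.2 * rng.normal(size=12)
x_test = np.linspace(-1, 1, 200)
y_test = np.sin(np.pi * x_test)  # noise-free targets for evaluation

def mse(p, x, y):
    """Mean squared error of polynomial p on points (x, y)."""
    return float(np.mean((p(x) - y) ** 2))

for degree in [1, 3, 11]:
    p = Polynomial.fit(x_train, y_train, degree)
    print(f"degree {degree:2d}: train MSE = {mse(p, x_train, y_train):.4f}, "
          f"test MSE = {mse(p, x_test, y_test):.4f}")
```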

Underfitting

Underfitting arises when a model lacks the complexity to capture the underlying patterns within the data. Consequently, it inadequately fits the training data, leading to subpar performance when applied to new data.

Characteristics:

- Poor performance on both the training and testing datasets.

- The model is too simple and does not capture the basic trends in the data.

Common Causes:

- The model is too simple and has very few parameters.

- Features used in the model do not adequately capture the complexities of the data.

- Excessive use of regularisation (too strong a penalty for model complexity).

Mitigation Strategies:

- Increase the complexity of the model by using more parameters or choosing a more sophisticated model.

- Feature engineering: Create more features or use different techniques to extract and select relevant features.

- Reduce the regularisation strength (λ) if the model is overly penalised.

- Ensure the model is properly trained and tweak training parameters like the number of epochs or learning rate.
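Excessive regularisation can be seen directly by sweeping λ for Ridge regression on synthetic data (a minimal sketch, assuming NumPy and the closed-form solution without an intercept). As λ grows, the weights shrink towards zero and the training error rises towards that of always predicting zero, which is underfitting; reducing λ reverses this.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form Ridge: solve (X^T X + lam I) w = X^T y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

rng = np.random.default_rng(6)
X = rng.normal(size=(80, 4))
y = X @ np.array([1.0, -2.0, 0.5, 3.0]) + 0.3 * rng.normal(size=80)

for lam in [0.1, 10.0, 1e3, 1e5]:
    w = ridge_fit(X, y, lam)
    train_mse = np.mean((X @ w - y) ** 2)
    print(f"lambda={lam:g}: train MSE = {train_mse:.3f}, ||w|| = {np.linalg.norm(w):.3f}")
```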

Balancing Act

Finding the balance between overfitting and underfitting is key to developing effective machine learning models. It involves choosing the right model complexity, adequately preparing the data, selecting suitable features, and tuning the training process (including regularisation and other parameters). The aim is to build a model that generalises well to new, unseen datasets while maintaining good performance on the training data.

What Are Bias and Variance?

Bias and variance are two fundamental concepts that describe different types of errors in predictive models in machine learning and statistics. Understanding bias and variance is crucial for diagnosing model performance issues and navigating the trade-offs between underfitting and overfitting.

Bias

Bias in machine learning arises when a simplified model fails to capture the complexities of a real-world problem. This oversight can lead to underfitting, where the algorithm overlooks important relationships between input features and target outputs.

Characteristics:

- Bias is the difference between our model's expected (or average) prediction and the correct value we are trying to predict. Models with high bias pay little attention to the training data and oversimplify the problem, often leading to underfitting.

- High bias can lead to a model that is too simple and does not capture the complexity of the data.

Variance

Variance refers to the amount by which the model's predictions would change if we trained it on a different training data set. Essentially, variance indicates how widely the model's predictions are spread around their average. Excessive variance can lead an algorithm to model the random fluctuations in the training data instead of the underlying relationship, resulting in overfitting.

Characteristics:

- Variance quantifies the extent to which predictions for a specific point fluctuate across various model instances.

- Elevated variance may cause the model to capture the noise within the training data instead of the underlying signal, thereby causing poor performance on unseen data.

Bias-Variance Trade-off

The relationship between bias and variance is referred to as the bias-variance trade-off. Ideally both are low, but making a model more flexible usually lowers bias at the cost of higher variance, and vice versa:

- High Bias, Low Variance: The models are consistent but inaccurate on average, typical of overly simplified models.

- Low Bias, High Variance: Models are accurate on average but inconsistent across different datasets. This is typical of overly complex models.

- Low Bias, Low Variance: Models are accurate and consistent on training and new data, indicating a good balance between model complexity and performance on unseen data.

- High Bias, High Variance: Models are inaccurate and inconsistent, performing poorly in training and on new data.

Balancing the Trade-off:

- Underfitting: Occurs when the model is too simple, characterised by low variance and high bias.

- Overfitting: Occurs when the model is too complex, characterised by high variance and low bias.
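The trade-off can be made concrete for Ridge regression. Assuming a fixed design matrix X and targets y = Xw + noise with standard deviation σ (all synthetic here), the squared bias and the variance of the prediction at a point x0 can be computed exactly; their sum is the expected squared error at x0, excluding the irreducible noise. In this sketch (helper name hypothetical), increasing λ lowers the variance while introducing bias, which is zero at λ = 0.

```python
import numpy as np

def ridge_bias_variance(X, w_true, x0, lam, sigma):
    """Exact squared bias and variance of the Ridge prediction at x0, assuming a
    fixed design X and targets y = X @ w_true + noise with noise std sigma."""
    p = X.shape[1]
    A = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)  # w_hat = A @ y
    expected_pred = x0 @ A @ X @ w_true                  # mean prediction over noise
    bias_sq = (expected_pred - x0 @ w_true) ** 2
    variance = sigma ** 2 * np.sum((x0 @ A) ** 2)        # prediction is linear in y
    return float(bias_sq), float(variance)

rng = np.random.default_rng(7)
X = rng.normal(size=(30, 10))
w_true = rng.normal(size=10)
x0 = rng.normal(size=10)

for lam in [0.0, 1.0, 10.0, 100.0]:
    b, v = ridge_bias_variance(X, w_true, x0, lam, sigma=1.0)
    print(f"lambda={lam:g}: bias^2 = {b:.4f}, variance = {v:.4f}, total = {b + v:.4f}")
```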

Conclusion

Regularisation is a fundamental skill for any AI engineer who wants to build high-performing, efficient, and scalable machine learning models. Learning and applying different regularisation techniques, such as L1 and L2 regularisation, Elastic Net, Dropout and more, will improve the performance of your models and deepen your understanding of the fundamental principles of machine learning. Regularisation primarily addresses overfitting, and tuning its strength carefully helps avoid underfitting; it also improves model stability.